The Tech Sales Newsletter #108: Situational Awareness revisited

Source: TBPN

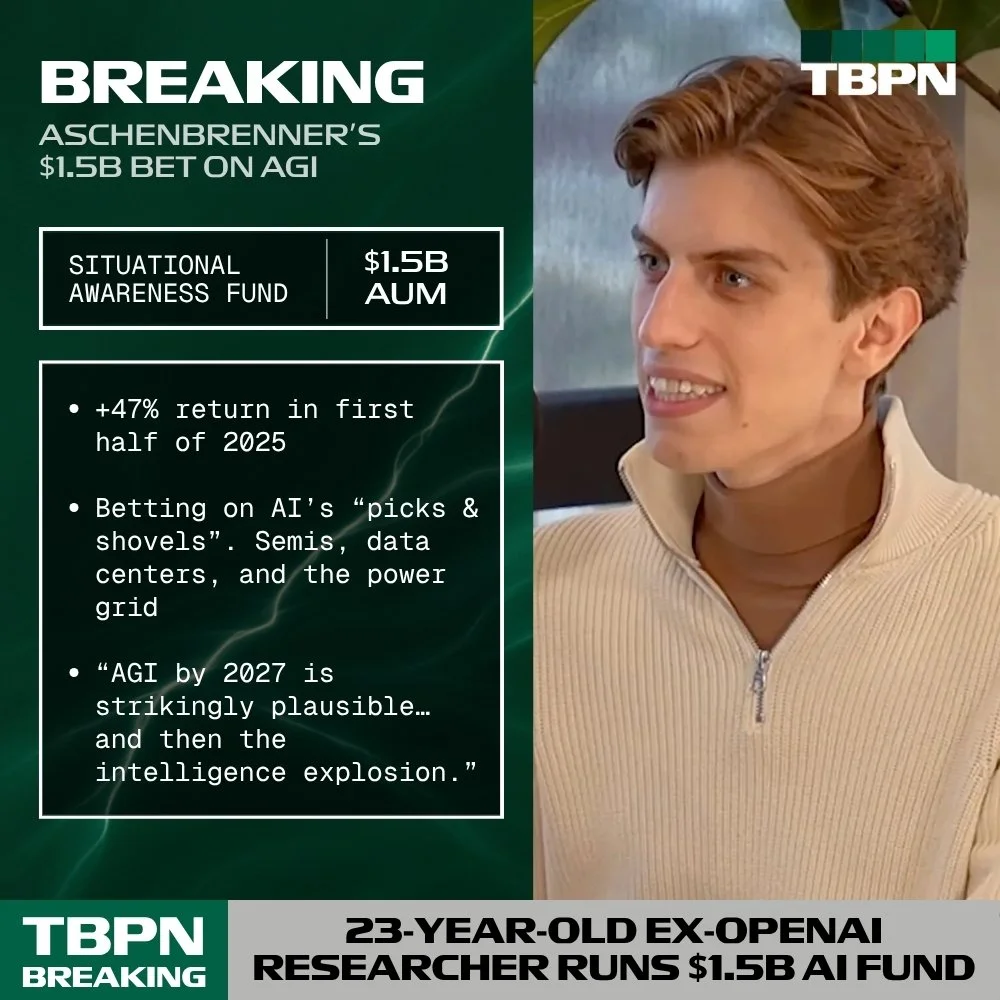

One of the more influential AI whitepapers of the last 24 months came from a rather young OpenAI researcher, Leopold Aschenbrenner, who laid out a lucid roadmap to AGI and argued that AGI will obviously become the single most important national security topic in the world.

He was fired from OpenAI (allegedly because he had flagged poor security practices, a topic his paper addresses extensively), and shortly afterwards he launched a hedge fund that turns his "Situational Awareness" thesis into actionable investments.

This is a good moment to revisit the paper. It was published in June 2024, which feels like a decade ago on our new, accelerated timeline.

The key takeaway

For tech sales: The biggest challenge in tech sales right now is positioning accurately for the next few years of exponential growth. Cloud infrastructure software (specifically hyperscalers, cybersecurity, and "plumbing" for inference) appears to be mostly safe. Everything else will live or die by the evolution of the frontier models, until we get closer to superintelligence and the economy changes drastically.

For investors: It should be clear by now that we are transitioning to a new type of market, and most players are struggling to adapt their strategy in real time. Depending on your point of view, an acceleration in the development of superintelligence is likely to bring us closer to the techno-capital machine concept, with a significant premium going to real-world AI and national security use cases.

Situational awareness

Source: SITUATIONAL AWARENESS: The Decade Ahead

AGI by 2027 is strikingly plausible. GPT-2 to GPT-4 took us from ~preschooler to ~smart high-schooler abilities in 4 years. Tracing trendlines in compute (~0.5 orders of magnitude or OOMs/year), algorithmic efficiencies (~0.5 OOMs/year), and “unhobbling” gains (from chatbot to agent), we should expect another preschooler-to-high-schooler-sized qualitative jump by 2027.

Look. The models, they just want to learn. You have to understand this. The models, they just want to learn.

Ilya Sutskever

GPT-4’s capabilities came as a shock to many: an AI system that could write code and essays, could reason through difficult math problems, and ace college exams. A few years ago, most thought these were impenetrable walls.

But GPT-4 was merely the continuation of a decade of break-neck progress in deep learning. A decade earlier, models could barely identify simple images of cats and dogs; four years earlier, GPT-2 could barely string together semi-plausible sentences. Now we are rapidly saturating all the benchmarks we can come up with. And yet this dramatic progress has merely been the result of consistent trends in scaling up deep learning.

There have been people who have seen this for far longer. They were scoffed at, but all they did was trust the trendlines. The models, they just want to learn; you scale them up, and they learn more.

I make the following claim: it is strikingly plausible that by 2027, models will be able to do the work of an AI researcher/engineer. That doesn’t require believing in sci-fi; it just requires believing in straight lines on a graph.

It's well known that I like the phrase "directionally correct," because it can reveal a lot about a trend without every single directional bet along the way needing to come true.

The compelling argument here is that, between massive investment in compute and constant iteration on the research side, we will be able to scale existing models several orders of magnitude (OOMs) further. The paper was written before o1, the first reasoning model (previewed in September 2024 and fully released in December 2024), and since then we have already seen another OOM-sized leap, to PhD-level intelligence available in our pocket. The logical conclusion of yet another OOM leap is that we might surpass PhD-level intelligence and reach a sufficient level of autonomy. Autonomy means independent AI agent researchers, scaled to billions of them.
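The OOM arithmetic behind "straight lines on a graph" is simple enough to check by hand. A minimal sketch, assuming the paper's rough trend rates of ~0.5 OOMs/year each from physical compute and algorithmic efficiency, and deliberately leaving out unhobbling gains, which do not reduce to a single yearly number:

```python
def effective_compute_ooms(years: float, compute_rate: float = 0.5, algo_rate: float = 0.5) -> float:
    """Orders of magnitude (OOMs) of effective compute gained over `years`.

    Assumption: the two trendlines simply add in log space,
    physical compute (~0.5 OOM/yr) + algorithmic efficiency (~0.5 OOM/yr).
    Unhobbling gains are not modeled.
    """
    return years * (compute_rate + algo_rate)


def ooms_to_multiplier(ooms: float) -> float:
    """One OOM is a 10x increase, so n OOMs is a 10**n multiplier."""
    return 10 ** ooms


if __name__ == "__main__":
    # Four more years of trend, e.g. GPT-4 (2023) to 2027.
    gained = effective_compute_ooms(4)
    # prints "4.0 OOMs = 10,000x effective compute"
    print(f"{gained:.1f} OOMs = {ooms_to_multiplier(gained):,.0f}x effective compute")
```

Working in log space is the point: two rates that each look modest (half an OOM a year) compound to a 10,000x scale-up in four years under these assumptions.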

Over and over again, year after year, skeptics have claimed “deep learning won’t be able to do X” and have been quickly proven wrong.

Now the hardest unsolved benchmarks are tests like GPQA, a set of PhD-level biology, chemistry, and physics questions.

Many of the questions read like gibberish to me, and even PhDs in other scientific fields spending 30+ minutes with Google barely score above random chance. Claude 3 Opus currently gets ~60%, compared to in-domain PhDs who get ~80%, and I expect this benchmark to fall as well, in the next generation or two.

Source: GPQA Diamond Benchmark

Fourteen months later, every version of GPT-5 is able to score higher than the domain-specific PhD average.

Source: SITUATIONAL AWARENESS: The Decade Ahead

While today we are seeing an explosion of highly accurate image models like nano-banana, it was clear even two years ago that we could generate this level of fidelity; it just required more compute. Many "new big jumps in model performance" are simply long-term work that was directionally correct and obvious to anybody involved behind the scenes.

Source: SITUATIONAL AWARENESS: The Decade Ahead

While the explosion in compute availability has been very helpful, efficiency is the other significant lever being pulled today. In the imaging example, it didn't make sense to spend massive amounts of compute on image generation, but it becomes viable once the algorithms are efficient enough to reduce the total energy footprint of offering a service around the model.

All of the labs are rumored to be making massive research bets on new algorithmic improvements or approaches to get around this. Researchers are purportedly trying many strategies, from synthetic data to self-play and RL approaches. Industry insiders seem to be very bullish: Dario Amodei (CEO of Anthropic) recently said on a podcast: “if you look at it very naively we’re not that far from running out of data [. . . ] My guess is that this will not be a blocker [. . . ] There’s just many different ways to do it.”

Of course, any research results on this are proprietary and not being published these days.

In addition to insider bullishness, I think there’s a strong intuitive case for why it should be possible to find ways to train models with much better sample efficiency (algorithmic improvements that let them learn more from limited data). Consider how you or I would learn from a really dense math textbook:

• What a modern LLM does during training is, essentially, very very quickly skim the textbook, the words just flying by, not spending much brain power on it.

• Rather, when you or I read that math textbook, we read a couple pages slowly; then have an internal monologue about the material in our heads and talk about it with a few study-buddies; read another page or two; then try some practice problems, fail, try them again in a different way, get some feedback on those problems, try again until we get a problem right; and so on, until eventually the material “clicks.”

• You or I also wouldn’t learn much at all from a pass through a dense math textbook if all we could do was breeze through it like LLMs.

• But perhaps, then, there are ways to incorporate aspects of how humans would digest a dense math textbook to let the models learn much more from limited data. In a simplified sense, this sort of thing (having an internal monologue about material, having a discussion with a study-buddy, trying and failing at problems until it clicks) is what many synthetic data/self-play/RL approaches are trying to do.

Today, all of this has played out. GPT-5’s training used three main stages:

Unsupervised pretraining: The model learned from a massive, multilingual dataset including books, articles, web pages, academic papers, and licensed content. This stage provided the foundational knowledge the model needed.

Supervised fine-tuning (SFT): The model was further trained on datasets curated and labeled by humans, ensuring it could respond accurately to explicit instructions.

Reinforcement learning from human feedback (RLHF): Iterative feedback from human testers was used to refine the model’s decision-making and alignment, optimizing its ability to handle complex queries and avoid unsafe outputs.

The potential efficiencies and changes in technique were obvious then, but that didn't stop Wall Street from shorting the hyperscalers on the grounds that "progress is stalling." The same thing is playing out today.

As an aside, this also means that we should expect more variance between the different labs in coming years compared to today. Up until recently, the state of the art techniques were published, so everyone was basically doing the same thing. (And new upstarts or open source projects could easily compete with the frontier, since the recipe was published.)

Now, key algorithmic ideas are becoming increasingly proprietary. I’d expect labs’ approaches to diverge much more, and some to make faster progress than others—even a lab that seems on the frontier now could get stuck on the data wall while others make a breakthrough that lets them race ahead. And open source will have a much harder time competing. It will certainly make things interesting. (And if and when a lab figures it out, their breakthrough will be the key to AGI, key to superintelligence—one of the United States’ most prized secrets.)

Today, open source AI is both flourishing and decidedly behind. On one hand, the variety and availability of models is tremendous. On the other hand, for experienced practitioners it's obvious why Claude Code or GPT-5 Pro delivers consistently better results across a variety of tasks. This is the equivalent of two ERP companies offering a similar product "in principle," but one is hiring mostly outsourced contractors and the other one is building an A-team internally. Who wins long term?

Finally, the hardest to quantify—but no less important—category of improvements: what I’ll call “unhobbling.”

Imagine if when asked to solve a hard math problem, you had to instantly answer with the very first thing that came to mind. It seems obvious that you would have a hard time, except for the simplest problems. But until recently, that’s how we had LLMs solve math problems. Instead, most of us work through the problem step-by-step on a scratchpad, and are able to solve much more difficult problems that way.

“Chain-of-thought” prompting unlocked that for LLMs. Despite excellent raw capabilities, they were much worse at math than they could be because they were hobbled in an obvious way, and it took a small algorithmic tweak to unlock much greater capabilities. We’ve made huge strides in “unhobbling” models over the past few years.

RLHF (reinforcement learning from human feedback), chain of thought (CoT), scaffolding, tool usage, increased context length, and post-training are all techniques that have been used extensively to deliver significantly better-performing models and applications. The funny thing is that today we hear similar chatter about "stalled progress," because AI agents are difficult to implement and don't perform consistently well. All of this will end up being solved (and arguably it already is, for leading companies with the relevant know-how).
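To make "scaffolding" and "tool usage" concrete: the model is wrapped in a loop that lets it request a tool, receive the result, and continue until it produces an answer. A minimal sketch under stated assumptions: `fake_model` is a hypothetical stand-in for a real LLM call (no real API is used), and the single `calculator` tool and the message format are illustrative, not any lab's actual protocol.

```python
import ast
import operator

# Hypothetical tool: evaluates binary +, -, *, / over numeric literals without eval().
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}

def calculator(expr: str) -> float:
    def _eval(node):
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        raise ValueError(f"unsupported expression: {expr!r}")
    return _eval(ast.parse(expr, mode="eval"))

TOOLS = {"calculator": calculator}

def fake_model(history: list[dict]) -> dict:
    """Hypothetical LLM stand-in: requests the calculator once, then answers."""
    last = history[-1]
    if last["role"] == "user":
        # "What is 31 * 10?" -> ask the tool to evaluate "31 * 10"
        expr = last["content"].removeprefix("What is ").rstrip("?")
        return {"type": "tool_call", "tool": "calculator", "input": expr}
    # Otherwise the last message is a tool result: turn it into a final answer.
    return {"type": "answer", "content": f"The answer is {last['content']}."}

def run_agent(question: str, model=fake_model, max_steps: int = 5) -> str:
    """The scaffold: alternate model calls and tool calls until the model answers."""
    history = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        action = model(history)
        if action["type"] == "answer":
            return action["content"]
        result = TOOLS[action["tool"]](action["input"])  # execute the requested tool
        history.append({"role": "tool", "content": result})
    raise RuntimeError("no answer within max_steps")

print(run_agent("What is 31 * 10?"))  # prints "The answer is 310."
```

The model itself never does the arithmetic here; the scaffold supplies the missing capability, which is the essence of "unhobbling" via tools.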

Source: SITUATIONAL AWARENESS: The Decade Ahead

Altogether, the path to multiple order-of-magnitude improvements over a short period of time is obvious. Structurally, most of it comes down to getting LLMs, which are built on the attention-based transformer architecture, to actually pay attention properly across large context windows.

Source: SITUATIONAL AWARENESS: The Decade Ahead

We are currently only scratching the surface of effectively using context windows equivalent to a workday or a workweek of work (mostly in coding applications). This will inevitably improve.
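To put "workday" and "workweek" in token terms, a back-of-the-envelope sketch. It assumes a person "thinks" at roughly 100 tokens per minute and works 8-hour days and 40-hour weeks; those rates are rough assumptions for illustration, not measured figures.

```python
TOKENS_PER_MINUTE = 100  # assumption: rough rate of human "thinking" expressed in tokens

def tokens_for(hours: float, tokens_per_minute: int = TOKENS_PER_MINUTE) -> int:
    """Tokens a model would need to match `hours` of continuous human work."""
    return int(hours * 60 * tokens_per_minute)

# Assumed work horizons (hours of human work).
horizons = {"one hour": 1, "a workday (8h)": 8, "a workweek (40h)": 40, "a work month (~160h)": 160}
for label, hours in horizons.items():
    print(f"{label:>22}: {tokens_for(hours):>10,} tokens")
```

Under these assumptions a workday is about 48,000 tokens and a workweek about 240,000, which is why workweek-length tasks need not just a long context window but a model that actually attends well across all of it.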

Source: SITUATIONAL AWARENESS: The Decade Ahead

The performance jump from GPT-2 to GPT-4 spanned multiple orders of magnitude in about four years.

Source: SITUATIONAL AWARENESS: The Decade Ahead

So what happens when we equip the LLMs with the right tools and fix their attention across a large context window? What happens when we also start actually training them on specific jobs rather than “general knowledge”?

Source: SITUATIONAL AWARENESS: The Decade Ahead

These improvements matter because the logical destination over the next few years is agentic AI researchers.

We don’t need to automate everything—just AI research. A common objection to transformative impacts of AGI is that it will be hard for AI to do everything. Look at robotics, for instance, doubters say; that will be a gnarly problem, even if AI is cognitively at the levels of PhDs. Or take automating biology R&D, which might require lots of physical lab work and human experiments.

But we don’t need robotics—we don’t need many things—for AI to automate AI research. The jobs of AI researchers and engineers at leading labs can be done fully virtually and don’t run into real-world bottlenecks in the same way (though it will still be limited by compute, which I’ll address later). And the job of an AI researcher is fairly straightforward, in the grand scheme of things: read ML literature and come up with new questions or ideas, implement experiments to test those ideas, interpret the results, and repeat. This all seems squarely in the domain where simple extrapolations of current AI capabilities could easily take us to or beyond the levels of the best humans by the end of 2027.

It’s worth emphasizing just how straightforward and hacky some of the biggest machine learning breakthroughs of the last decade have been: “oh, just add some normalization” (LayerNorm/BatchNorm) or “do f(x)+x instead of f(x)” (residual connections)” or “fix an implementation bug” (Kaplan → Chinchilla scaling laws). AI research can be automated. And automating AI research is all it takes to kick off extraordinary feedback loops.
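The "do f(x)+x instead of f(x)" trick is easy to see in a toy. Stacking many layers that each shrink their input makes the signal vanish, while a residual path carries it through. A minimal sketch under stated assumptions: the scalar-weight layer `f`, the weight 0.1, and the depth of 20 are hypothetical choices; real networks use learned weight matrices and nonlinearities, and real LayerNorm also has learned gain and bias parameters, omitted here.

```python
import math

def layer_norm(x: list[float], eps: float = 1e-5) -> list[float]:
    """Normalize to zero mean and unit variance (learned gain/bias omitted)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def f(x: list[float], w: float = 0.1) -> list[float]:
    """Hypothetical toy layer: shrink every element by a small weight w."""
    return [w * v for v in x]

def plain_stack(x: list[float], depth: int) -> list[float]:
    """x <- f(x), repeated: the signal is multiplied by w**depth."""
    for _ in range(depth):
        x = f(x)
    return x

def residual_stack(x: list[float], depth: int) -> list[float]:
    """x <- x + f(layer_norm(x)), repeated (a "pre-norm" residual block)."""
    for _ in range(depth):
        x = [xi + fi for xi, fi in zip(x, f(layer_norm(x)))]
    return x

x0 = [1.0, 2.0, 3.0]
print(plain_stack(x0, 20))     # every element on the order of 1e-20: the signal has vanished
print(residual_stack(x0, 20))  # mean stays 2.0; the spread grows slowly instead of collapsing
```

The residual version keeps the original input reachable at every depth, and the normalization keeps each layer's contribution at a controlled scale, which is roughly why such "hacky" tweaks made much deeper networks trainable.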

This is the most important insight into the race to superintelligence. While the general public is delusional about the capabilities of AI and regular enterprises are still confused about how to leverage the existing technology, the actual insiders simply want to progress along the effective compute curve.

While the current implementations of AI are doing their job of providing revenue and justifying the massive investments made, the reality is that for the frontier labs that want to get to superintelligence, offering a product is simply a means to an end.

Musk demonstrated this with SpaceX, which has a very clear mission: to put a colony on Mars. Since no single individual could fund such an endeavor, SpaceX also makes money through Starlink. The Starlink logo literally depicts the rotation of Earth relative to Mars and the ideal travel path between the two planets.

Similarly, the frontier labs are essentially playing a game of "default alive," productizing the models they create until they reach a model advanced enough to serve as an agentic AI researcher. For somebody like Musk, the moment there is a clear path to superintelligence, we will probably see all Grok infrastructure reallocated toward it. The integrations on X and the companions might still be delivered through a third-party inference service, but the compute Musk controls, and his focus, will go toward that singular goal.

It's no accident that we aren't seeing any "products" from Ilya, one of the most influential figures of his generation in the research community. This is the mission statement of "Safe Superintelligence," the new company that Ilya founded:

Building safe superintelligence (SSI) is the most important technical problem of our time.

We have started the world’s first straight-shot SSI lab, with one goal and one product: a safe superintelligence.

It’s called Safe Superintelligence Inc.

SSI is our mission, our name, and our entire product roadmap, because it is our sole focus. Our team, investors, and business model are all aligned to achieve SSI.

We approach safety and capabilities in tandem, as technical problems to be solved through revolutionary engineering and scientific breakthroughs. We plan to advance capabilities as fast as possible while making sure our safety always remains ahead.

This way, we can scale in peace.

Our singular focus means no distraction by management overhead or product cycles, and our business model means safety, security, and progress are all insulated from short-term commercial pressures.

As a counterpoint, we should account for Mira Murati, who is clearly going in a different direction with her own company "Thinking Machines":

Emphasis on human-AI collaboration. Instead of focusing solely on making fully autonomous AI systems, we are excited to build multimodal systems that work with people collaboratively.

More flexible, adaptable, and personalized AI systems. We see enormous potential for AI to help in every field of work. While current systems excel at programming and mathematics, we're building AI that can adapt to the full spectrum of human expertise and enable a broader spectrum of applications.

The difference here is that Mira has always been a product-first leader rather than an AI researcher; she is also more bearish on the concept and viability of superintelligence, seeing it as a potential outcome over multiple decades rather than the next 5-10 years. Now, back to our friend Leopold's writing:

Source: SITUATIONAL AWARENESS: The Decade Ahead

In the intelligence explosion, explosive progress was initially only in the narrow domain of automated AI research. As we get superintelligence, and apply our billions of (now superintelligent) agents to R&D across many fields, I expect explosive progress to broaden:

An AI capabilities explosion. Perhaps our initial AGIs had limitations that prevented them fully automating work in some other domains (rather than just in the AI research domain); automated AI research will quickly solve these, enabling automation of any and all cognitive work.

Solve robotics. Superintelligence won’t stay purely cognitive for long. Getting robotics to work well is primarily an ML algorithms problem (rather than a hardware problem), and our automated AI researchers will likely be able to solve it (more below). Factories would go from human-run, to AI-directed using human physical labor, to soon being fully run by swarms of robots.

Dramatically accelerate scientific and technological progress. Yes, Einstein alone couldn’t develop neuroscience and build a semiconductor industry, but a billion superintelligent automated scientists, engineers, technologists, and robot technicians (with the robots moving at 10x or more human speed!) would make extraordinary advances in many fields in the space of years. (Here’s a nice short story visualizing what AI-driven R&D might look like.) The billion superintelligences would be able to compress the R&D effort human researchers would have done in the next century into years. Imagine if the technological progress of the 20th century were compressed into less than a decade. We would have gone from flying being thought a mirage, to airplanes, to a man on the moon and ICBMs in a matter of years. This is what I expect the 2030s to look like across science and technology.

An industrial and economic explosion. Extremely accelerated technological progress, combined with the ability to automate all human labor, could dramatically accelerate economic growth (think: self-replicating robot factories quickly covering all of the Nevada desert). The increase in growth probably wouldn’t just be from 2%/year to 2.5%/year; rather, this would be a fundamental shift in the growth regime, more comparable to the historical step-change from very slow growth to a couple percent a year with the industrial revolution. We could see economic growth rates of 30%/year and beyond, quite possibly multiple doublings a year. This follows fairly straightforwardly from economists’ models of economic growth. To be sure, this may well be delayed by societal frictions; arcane regulation might ensure lawyers and doctors still need to be human, even if AI systems were much better at those jobs; surely sand will be thrown into the gears of rapidly expanding robo-factories as society resists the pace of change; and perhaps we’ll want to retain human nannies; all of which would slow the growth of the overall GDP statistics. Still, in whatever domains we remove human-created barriers (e.g., competition might force us to do so for military production), we’d see an industrial explosion.

Provide a decisive and overwhelming military advantage. Even early cognitive superintelligence might be enough here; perhaps some superhuman hacking scheme can deactivate adversary militaries. In any case, military power and technological progress has been tightly linked historically, and with extraordinarily rapid technological progress will come concomitant military revolutions. The drone swarms and roboarmies will be a big deal, but they are just the beginning; we should expect completely new kinds of weapons, from novel WMDs to invulnerable laser-based missile defense to things we can’t yet fathom. Compared to pre-superintelligence arsenals, it’ll be like 21st century militaries fighting a 19th century brigade of horses and bayonets. (I discuss how superintelligence could lead to a decisive military advantage in a later piece.)

Be able to overthrow the US government. Whoever controls superintelligence will quite possibly have enough power to seize control from pre-superintelligence forces. Even without robots, the small civilization of superintelligences would be able to hack any undefended military, election, television, etc. system, cunningly persuade generals and electorates, economically outcompete nation-states, design new synthetic bioweapons and then pay a human in bitcoin to synthesize it, and so on. In the early 1500s, Cortes and about 500 Spaniards conquered the Aztec empire of several million; Pizarro and ~300 Spaniards conquered the Inca empire of several million; Alfonso and ~1000 Portuguese conquered the Indian Ocean. They didn’t have god-like power, but the Old World’s technological edge and an advantage in strategic and diplomatic cunning led to an utterly decisive advantage. Superintelligence might look similar.

The overall idea is that when most of us think about big leaps in AI capabilities, we do so from the perspective of the domains we are familiar with. Rapid change across multiple domains at once would set off chain reactions with unpredictable effects across the ecosystem.
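The growth figures in the quoted passage (2%/year today versus 30%/year and beyond) are easier to grasp as doubling times. A short sketch of the compounding arithmetic; the rates are the ones quoted above plus 100%/year as the threshold for "a doubling a year," used purely for illustration:

```python
import math

def doubling_time(rate: float) -> float:
    """Years for output growing at `rate` per year (0.02 = 2%) to double."""
    return math.log(2) / math.log(1 + rate)

def growth_over(years: int, rate: float) -> float:
    """Total multiplier after compounding `rate` for `years` years."""
    return (1 + rate) ** years

for rate in (0.02, 0.025, 0.30, 1.0):
    print(f"{rate:>6.1%}/yr: doubles every {doubling_time(rate):5.1f} years, "
          f"x{growth_over(10, rate):,.1f} over a decade")
```

At 2% a year, output doubles roughly every 35 years; at 30%, it doubles in under three years and grows almost 14x in a decade. "Multiple doublings a year" means annual growth well above 100%.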

AI security is about securing the nation

Now you might notice that the tone has shifted from "here is some extra intelligence oomph" to "those that control ASI will rule the world."

On the current course, the leading Chinese AGI labs won’t be in Beijing or Shanghai—they’ll be in San Francisco and London. In a few years, it will be clear that the AGI secrets are the United States’ most important national defense secrets—deserving treatment on par with B-21 bomber or Columbia-class submarine blueprints, let alone the proverbial “nuclear secrets”—but today, we are treating them the way we would random SaaS software. At this rate, we’re basically just handing superintelligence to the CCP.

All the trillions we will invest, the mobilization of American industrial might, the efforts of our brightest minds—none of that matters if China or others can simply steal the model weights (all a finished AI model is, all AGI will be, is a large file on a computer) or key algorithmic secrets (the key technical breakthroughs necessary to build AGI).

America’s leading AI labs self-proclaim to be building AGI: they believe that the technology they are building will, before the decade is out, be the most powerful weapon America has ever built. But they do not treat it as such. They measure their security efforts against “random tech startups,” not “key national defense projects.” As the AGI race intensifies—as it becomes clear that superintelligence will be utterly decisive in international military competition—we will have to face the full force of foreign espionage. Currently, labs are barely able to defend against scriptkiddies, let alone have “North Korea-proof security,” let alone be ready to face the Chinese Ministry of State Security bringing its full force to bear.

And this won’t just matter years in the future. Sure, who cares if GPT-4 weights are stolen—what really matters in terms of weight security is that we can secure the AGI weights down the line, so we have a few years, you might say. (Though if we’re building AGI in 2027, we really have to get moving!) But the AI labs are developing the algorithmic secrets—the key technical breakthroughs, the blueprints so to speak—for the AGI right now (in particular, the RL/self-play/synthetic data/etc “next paradigm” after LLMs to get past the data wall). AGI-level security for algorithmic secrets is necessary years before AGI-level security for weights. These algorithmic breakthroughs will matter more than a 10x or 100x larger cluster in a few years—this is a much bigger deal than export controls on compute, which the USG has been (presciently!) intensely pursuing. Right now, you needn’t even mount a dramatic espionage operation to steal these secrets: just go to any SF party or look through the office windows.

Our failure today will be irreversible soon: in the next 12-24 months, we will leak key AGI breakthroughs to the CCP. It will be the national security establishment’s single greatest regret before the decade is out.
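The "large file on a computer" point is easy to quantify: the raw weights take roughly parameter count times bytes per parameter. A sketch with hypothetical parameter counts (frontier labs do not publish theirs), ignoring optimizer state and file-format overhead:

```python
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "int8": 1}  # standard widths of these number formats

def checkpoint_size_gb(num_params: float, dtype: str = "bf16") -> float:
    """Approximate size of the raw weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

# Hypothetical model sizes, for illustration only.
for params in (70e9, 1e12):
    sizes = ", ".join(f"{d}: {checkpoint_size_gb(params, d):,.0f} GB" for d in BYTES_PER_PARAM)
    print(f"{params / 1e9:,.0f}B params -> {sizes}")
```

Even a hypothetical trillion-parameter model stored in bf16 comes to about 2 TB, small enough for a single portable drive, which underlines the paper's point that the end product of billions of dollars of compute is something that can be copied and carried out the door.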

It's not difficult to see how Leopold was able to turn his AI research career into running a large hedge fund. Some of the most interesting points in Situational Awareness extend beyond the dynamics of AI and into realpolitik. More importantly, he draws a compelling parallel with the history of the most defining technology race of the last century.

When it first became clear to a few that an atomic bomb was possible, secrecy, too, was perhaps the most contentious issue. In 1939 and 1940, Leo Szilard became known “throughout the American physics community as the leading apostle of secrecy in fission matters.” But he was rebuffed by most; secrecy was not at all something scientists were used to, and it ran counter to many of their basic instincts of open science. But it slowly became clear what had to be done: the military potential of this research was too great for it to simply be freely shared with the Nazis. And secrecy was finally imposed, just in time.

In the fall of 1940, Fermi had finished new carbon absorption measurements on graphite, suggesting graphite was a viable moderator for a bomb. Szilard assaulted Fermi with yet another secrecy appeal. “At this time Fermi really lost his temper; he really thought this was absurd,” Szilard recounted. Luckily, further appeals were eventually successful, and Fermi reluctantly refrained from publishing his graphite results.

At the same time, the German project had narrowed down on two possible moderator materials: graphite and heavy water. In early 1941 at Heidelberg, Walther Bothe made an incorrect measurement on the absorption cross-section of graphite, and concluded that graphite would absorb too many neutrons to sustain a chain reaction. Since Fermi had kept his result secret, the Germans did not have Fermi’s measurements to check against, and to correct the error. This was crucial: it led the German project to pursue heavy water instead—a decisive wrong path that ultimately doomed the German nuclear weapons effort.

If not for that last-minute secrecy appeal, the German bomb project may have been a much more formidable competitor—and history might have turned out very differently.

The interesting thing about world-changing research and inventions is that a) most people don't believe in them until they're done, and b) the same personality that keeps pushing to achieve the outcome tends to be too consumed by the mission to worry about practical concerns.

One look at the semiconductor industry, and at the history of ASML (the only producer of EUV machines in the world, i.e., the printer of AI technology), is enough to see these dynamics play out. In 2015, ASML was running an almost skeleton cybersecurity crew when it found out that Chinese threat actors had infiltrated its systems. This happened at a critical moment: the ramp-up to bringing EUV machines to market after close to 20 years of complicated research. Shortly after, Dutch security agencies intervened and helped set up what is today seen as one of the best cybersecurity teams in the industry.

Source: Elon Musk commenting on the lawsuit xAI filed against an ex-researcher

While the frontier labs have made progress on these elements since the paper was published, by and large, organizations like OpenAI and Anthropic would need a very different operating model from the one they run today. Just this week, xAI had a significant security breach when a researcher allegedly exported its codebase and tried to bring it over to OpenAI (which will almost certainly get him fired immediately there, so the whole "uploaded database" story doesn't make a lot of sense). The obvious question is why this Chinese researcher wouldn't have already shared xAI's research with other third parties (hint: he probably did).

A lead of a year or two or three on superintelligence could mean as utterly decisive a military advantage as the US coalition had against Iraq in the Gulf War. A complete reshaping of the military balance of power will be on the line.

Imagine if we had gone through the military technological developments of the 20th century in less than a decade. We’d have gone from horses and rifles and trenches, to modern tank armies, in a couple years; to armadas of supersonic fighter planes and nuclear weapons and ICBMs a couple years after that; to stealth and precision that can knock out an enemy before they even know you’re there another couple years after that.

That is the situation we will face with the advent of superintelligence: the military technological advances of a century compressed to less than a decade. We’ll see superhuman hacking that can cripple much of an adversary’s military force, roboarmies and autonomous drone swarms, but more importantly completely new paradigms we can’t yet begin to imagine, and the inventions of new WMDs with thousandfold increases in destructive power (and new WMD defenses too, like impenetrable missile defense, that rapidly and repeatedly upend deterrence equilibria).

And it wouldn’t just be technological progress. As we solve robotics, labor will become fully automated, enabling a broader industrial and economic explosion, too. It is plausible growth rates could go into the 10s of percent a year; within at most a decade, the GDP of those with the lead would trounce those behind. Rapidly multiplying robot factories would mean not only a drastic technological edge, but also production capacity to dominate in pure materiel. Think millions of missile interceptors; billions of drones; and so on.

Of course, we don’t know the limits of science and the many frictions that could slow things down. But no godlike advances are necessary for a decisive military advantage. And a billion superintelligent scientists will be able to do a lot. It seems clear that within a matter of years, pre-superintelligence militaries would become hopelessly outclassed.

Highly advanced software systems, combined with large quantities of robotic hardware (drones, for example), are likely to dominate the battlefield of the future. From a recent article in Arena Magazine:

Erik Prince, founder of private defense firm Blackwater, told me drones are "the biggest disruption to warfare since Genghis Khan put stirrups on horses." DIY drones now inflict about 70% of all casualties in Ukraine. Drones destroy more armored vehicles in Ukraine than all traditional weapons combined. Ukrainian soldiers duct-tape grenades to cheap quadcopters that streak across enemy lines to destroy tanks worth 50,000 times more.

"Drones are fundamentally an offensive weapon," Scott tells me. Nathan adds: "the same precision strike capabilities only great nation states could afford to build are now available to any hobbyist, or terrorist, with an Amazon account."

That asymmetry is a problem for America's long-dominant military. Nathan considers Martin Gurri's 2014 book The Revolt of the Public "the most important book so far in the 21st century." Required reading among tech elites like Marc Andreessen, Gurri's book explains how the digital revolution shattered elite authority and disrupted top-down, centralized, well-funded incumbents in media, politics, and business.

And now, war. Ukraine leaves no question that conventional military power is going obsolete. America shipped 31 Abrams tanks to Ukraine, costing $10 million each. 19 have been destroyed or disabled, many taken out by drones costing less than a high-end smartphone. The remaining tanks were pulled off the front lines.

"In Ukraine we're launching $6 million missiles to take out $20,000 drones," Nathan adds. "1954 called and they want their solution back. CX2 exists to bring us back to cost parity."

The US military remains stuck building big, expensive, exquisite weapons systems. Gerald R. Ford-class aircraft carriers cost $13 billion each, after $37 billion in R&D. The Navy produces about one every five years.

Imagine that carrier facing a swarm of 1,000 cheap grenade-laden drones. There's only one winner. Nathan quotes Stalin: "Quantity has a quality all of its own."
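The cost asymmetry in these figures comes down to simple arithmetic. Below is a minimal Python sketch that uses only the numbers quoted in the Arena Magazine piece. The helper names `exchange_ratio` and `loss_rate` are hypothetical.

```python
def exchange_ratio(defender_cost: float, attacker_cost: float) -> float:
    """How many times more the defender spends per engagement than the attacker."""
    return defender_cost / attacker_cost


def loss_rate(lost: int, fielded: int) -> float:
    """Fraction of a fielded fleet that was destroyed or disabled."""
    return lost / fielded


# Figures as quoted in the Arena Magazine article.
INTERCEPTOR_COST = 6_000_000   # USD per missile
DRONE_COST = 20_000            # USD per drone
ABRAMS_SHIPPED = 31
ABRAMS_LOST = 19
ABRAMS_UNIT_COST = 10_000_000  # USD per tank

print(f"Exchange ratio: {exchange_ratio(INTERCEPTOR_COST, DRONE_COST):.0f}:1")
print(f"Abrams loss rate: {loss_rate(ABRAMS_LOST, ABRAMS_SHIPPED):.0%}")
print(f"Value of tanks lost: ${ABRAMS_LOST * ABRAMS_UNIT_COST / 1e6:.0f}M")
```

On the quoted figures, each interception costs the defender 300 times what the attacker spent. The 19 tanks lost out of 31 amount to about 61% of the fleet, or roughly $190 million of hardware at the quoted unit cost. In this framing, "cost parity" would mean pushing that exchange ratio back toward 1:1.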

Now combine this with cyberwarfare and the persuasive recruitment of intelligence assets on the ground to conduct espionage and sabotage. If Israel proved anything in the Twelve-Day War this summer, it is that when there is the political will to pursue every possible military scenario, the outcomes can be devastating for the opponent.

What happens when the will to, no pun intended, execute is combined with the capabilities of superintelligence? Which brings us to the Project.

Many plans for “AI governance” are put forth these days, from licensing frontier AI systems to safety standards to a public cloud with a few hundred million in compute for academics. These seem well-intentioned—but to me, it seems like they are making a category error.

I find it an insane proposition that the US government will let a random SF startup develop superintelligence. Imagine if we had developed atomic bombs by letting Uber just improvise.

Superintelligence—AI systems much smarter than humans—will have vast power, from developing novel weaponry to driving an explosion in economic growth. Superintelligence will be the locus of international competition; a lead of months potentially decisive in military conflict.

It is a delusion of those who have unconsciously internalized our brief respite from history that this will not summon more primordial forces. Like many scientists before us, the great minds of San Francisco hope that they can control the destiny of the demon they are birthing. Right now, they still can; for they are among the few with situational awareness, who understand what they are building. But in the next few years, the world will wake up. So too will the national security state. History will make a triumphant return.

As in many times before—Covid, WWII—it will seem as though the United States is asleep at the wheel—before, all at once, the government shifts into gear in the most extraordinary fashion. There will be a moment—in just a few years, just a couple more “2023-level” leaps in model capabilities and AI discourse—where it will be clear: we are on the cusp of AGI, and superintelligence shortly thereafter. While there’s a lot of flux within the exact mechanics, one way or another, the USG will be at the helm; the leading labs will (“voluntarily”) merge; Congress will appropriate trillions for chips and power; a coalition of democracies formed.

Startups are great for many things—but a startup on its own is simply not equipped for being in charge of the United States’ most important national defense project. We will need government involvement to have even a hope of defending against the all-out espionage threat we will face; the private AI efforts might as well be directly delivering superintelligence to the CCP. We will need the government to ensure even a semblance of a sane chain of command; you can’t have random CEOs (or random nonprofit boards) with the nuclear button. We will need the government to manage the severe safety challenges of superintelligence, to manage the fog of war of the intelligence explosion. We will need the government to deploy superintelligence to defend against whatever extreme threats unfold, to make it through the extraordinarily volatile and destabilized international situation that will follow. We will need the government to mobilize a democratic coalition to win the race with authoritarian powers, and forge (and enforce) a nonproliferation regime for the rest of the world. I wish it weren’t this way—but we will need the government. (Yes, regardless of the Administration.)

It's probably not a coincidence that the three individuals with the most conviction and capital to reach superintelligence today are Elon Musk, Sam Altman, and Mark Zuckerberg. In particular, Musk has already moved beyond the boundaries of "just being a businessman" and has a significant portion of his business intertwined with the US government. Altman is likely to follow whatever he is told (i.e., it's unlikely he would go founder mode and try to take AGI researchers away in the event of a government takeover), and Zuckerberg is clearly politically aware and willing to navigate a change in dynamics.

In a way, an argument can be made that all three of them expect the Project to become part of the national agenda and are positioning themselves accordingly.

Source: SITUATIONAL AWARENESS: The Decade Ahead

And so by 27/28, the endgame will be on. By 28/29 the intelligence explosion will be underway; by 2030, we will have summoned superintelligence, in all its power and might.

Whoever they put in charge of The Project is going to have a hell of a task: to build AGI, and to build it fast; to put the American economy on wartime footing to make hundreds of millions of GPUs; to lock it all down, weed out the spies, and fend off all-out attacks by the CCP; to somehow manage a hundred million AGIs furiously automating AI research, making a decade’s leaps in a year, and soon producing AI systems vastly smarter than the smartest humans; to somehow keep things together enough that this doesn’t go off the rails and produce rogue superintelligence that tries to seize control from its human overseers; to use those superintelligences to develop whatever new technologies will be necessary to stabilize the situation and stay ahead of adversaries, rapidly remaking US forces to integrate those; all while navigating what will likely be the tensest international situation ever seen. They better be good, I’ll say that.

For those of us who get the call to come along for the ride, it’ll be . . . stressful. But it will be our duty to serve the free world—and all of humanity. If we make it through and get to look back on those years, it will be the most important thing we ever did. And while whatever secure facility they find probably won’t have the pleasantries of today’s ridiculously-overcomped-AI-researcher-lifestyle, it won’t be so bad. SF already feels like a peculiar AI-researcher-college-town; probably this won’t be so different. It’ll be the same weirdly-small circle sweating the scaling curves during the day and hanging out over the weekend, kibitzing over AGI and the lab-politics-of-the-day.

Except, well—the stakes will be all too real.

See you in the desert, friends.

In the 15 months since it was published, events have closely tracked the ideas outlined in Situational Awareness, and Leopold has also monetized the short-term game plan exceptionally well.

While it's unlikely to age "perfectly" over the next few years (e.g., the kind of acceleration he predicts as early as 2027 is more likely to arrive somewhere between 2030 and 2035), it remains one of the best directionally accurate deep dives written by insiders. The question is: how are you aligning with the AGI trend?