The Tech Sales Newsletter #104: So is GCP undervalued?

There is a lot of focus these days on the performance of the "MAG7" tech companies, the largest and most successful players by market capitalization. Since basically all of them are directly tied to the AI opportunity and have large tech sales teams (except Apple, bless their heart), it's logical to ask questions such as 'where can I get the best value?'

Alphabet (and in this context GCP) is often flagged as having the highest potential relative to its current valuation. Let's explore this thesis.

The key takeaway

For tech sales: GCP is still mostly being sold as the underdog, at a time when its overall value proposition is clearly creating an opportunity to become the biggest player in the space. You can either sell with that vision in mind and drive large consolidations, or remain a "let's discount and be thankful they pay attention to us" player.

For investors: Alphabet appears to be temporarily mispriced because the market can't see beyond the dying cash cow of search. Given how the cloud businesses of Amazon and Microsoft ended up overtaking their original businesses, GCP+Gemini appears likely to follow the same cycle. The risk is whether Alphabet can turn its ownership of the full stack into becoming the number one AI player, but at these valuations, investors are getting the AI transformation at a steep discount.

Q2 Vibe check

I’ll briefly touch on the AI stack before turning to quarterly highlights.

First, AI infrastructure. We operate the leading global network of AI-optimized data centers and cloud regions.

We also offer the industry’s widest range of TPUs and GPUs, along with storage and software built on top. That’s why nearly all gen AI unicorns use Google Cloud. And it’s why a growing number, including leading AI research labs, like Safe Superintelligence and Physical Intelligence, use TPUs specifically. Our AI infrastructure investments are crucial to meeting the growth in demand from Cloud customers.

Next, world-class AI research, including models and tooling.

We continue to expand our Gemini 2.5 family of hybrid reasoning models, which provide industry-leading performance in nearly every major benchmark.

In addition to improving our popular workhorse model Flash, we debuted an extremely fast ‘Flash-Lite’ version.

We achieved gold-medal level performance in the International Math Olympiad, using an advanced version of Gemini with Deep Think. We can’t wait to bring Deep Think to users soon.

We have some of the best models available today at every price point. Our 2.5 models have been a catalyst for growth, and nine million developers have now built with Gemini.

I also want to mention Veo 3, our state-of-the-art video generation model. It’s been a viral hit, with people sharing clips created in the Gemini app, and with our new AI filmmaking tool, Flow. Since May, over 70 million videos have been generated using Veo 3.

And we recently introduced a feature in the Gemini app to turn photos into videos, which people absolutely love. It’s also rolling out to Google Photos users starting today.

Third, our products and platforms. We are bringing AI to all our users and partners through surfaces like Workspace, Chrome, and more. The growth in usage has been incredible.

At I/O in May, we announced that we processed 480 trillion monthly tokens across our surfaces. Since then, we have doubled that number, now processing over 980 trillion monthly tokens, a remarkable increase.

The Gemini app now has more than 450 million monthly active users and we continue to see strong growth and engagement, with daily requests growing over 50% from Q1.

In June alone, over 50 million people used AI-powered meeting notes in Google Meet.

When evaluating GCP, it's difficult to ignore the overall situation at Alphabet, as investment and attention are interconnected. A number of important IT decisions can also be influenced by the ecosystem benefit. For example, a large Workspace customer might see onboarding onto Vertex AI or Wiz as a logical step in their expanded relationship.

At the core of Alphabet's AI play is the need for Gemini to succeed in both Enterprise and the consumer space. For this to work, you essentially need to achieve three goals:

Deliver outstanding customer experiences in your consumer-facing applications (e.g., Search).

Extend adoption of AI features within Android and among regular users of Workspace products.

Offer best-in-class developer tools for growing GCP market share.

Source: Gainify

The reason Alphabet is perceived as undervalued is straightforward: its price-to-earnings ratio is 47% lower than the MAG7 average and is even below the S&P 500 average. What is the primary reason for this?

First up, this is an incredibly exciting moment for Search. We see AI powering an expansion in how people are searching for and accessing information, unlocking completely new kinds of questions you can ask Google.

Overall queries and commercial queries on Search continued to grow year-over-year. And our new AI experiences significantly contributed to this increase in usage.

We are also seeing that our AI features cause users to search more as they learn that Search can meet more of their needs. That’s especially true for younger users.

Let me go deeper on our new Search experiences.

We know how popular AI Overviews are because they are now driving over 10% more queries globally for the types of queries that show them, and this growth continues to increase over time. AI Overviews are now powered by Gemini 2.5, delivering the fastest AI responses in the industry.

We also saw strong growth in the use of multimodal Search, particularly the combination of Lens or Circle to Search, together with AI Overviews. This growth was most pronounced among younger users.

Our new end-to-end AI Search experience, AI Mode, continues to receive very positive feedback, particularly for longer and more complex questions. It’s still rolling out but already has over 100 million monthly active users in the U.S. and India.

We plan to keep enhancing the AI Mode experience for users by shipping great features, fast. That includes our advanced research tool Deep Search and more personalized responses.

The bear thesis for Alphabet is very straightforward: Search is the biggest revenue driver, and consumers are spending more time using a variety of alternative applications (e.g., ChatGPT) to find the information and products they care about. This means that even if Alphabet pulls that interest back to its own AI-powered applications over time, serving those users will require more compute because of inference costs. Essentially, the margin advantage that made Alphabet one of the best businesses ever created can no longer be maintained.

Some of this is self-inflicted: Google Search became a progressively worse product over the years, and part of the rapid adoption of LLMs for search was driven by deep dissatisfaction with the experience Alphabet offered.

Next, Google Cloud.

We see strong customer demand, driven by our product differentiation and our comprehensive AI product portfolio. Four stats show this.

One, the number of deals over $250 million, doubling year-over-year.

Two, in the first half of 2025, we signed the same number of deals over $1 billion that we did in all of 2024.

Three, the number of new GCP customers increased by nearly 28% quarter-over-quarter.

Four, more than 85,000 enterprises, including LVMH, Salesforce, and Singapore’s DBS Bank, now build with Gemini, driving a 35x growth in Gemini usage year-over-year.

Our models are served on our AI infrastructure, which offers industry-leading performance and cost efficiency for both training and inference.

Along with our AI accelerators, we introduced new innovations in storage, including Anywhere Cache, which improves inference latency by up to 70%; and Rapid Storage, which delivers a 5x improvement in latency compared to leading hyperscalers.

In addition, we have optimized AI software packages, including PyTorch and JAX, with full open-source support for various AI training and serving demands.

We have also integrated AI agents deeply into each of our Cloud products.

Wayfair is leveraging our databases integrated with AI to streamline data pipelines and deliver more personalized customer experiences.

Mattel is leveraging our Gemini-powered Data Agents and BigQuery to review and act on product feedback more quickly.

Target is using our Gemini-powered Threat Intelligence and Security Operations Agents to improve cybersecurity.

Capgemini is utilizing our AI Software Engineering Agents to deliver higher quality software faster by automating tasks, from code generation to testing.

And BBVA says Gemini in Google Workspace is saving employees nearly three hours per week by automating repetitive tasks. It is now rolling it out to 100,000 employees globally.

We are also focused on building a flourishing AI agent ecosystem.

We introduced an open source Agent Development Kit which now has over a million downloads in less than four months.

We also introduced Agentspace, an open and interoperable enterprise chat, search and agent platform. Gordon Food Service is bringing Agentspace to its U.S. employees, which is enabling better, more efficient decision-making. And over one million subscriptions have been booked for Agentspace ahead of its general availability.

The big difference between the AI community on X and Enterprise is the scale of adoption and how "loud" users can be. The Target deal mentioned here is either a brand-new win for their SIEM platform (Google SecOps) or an upsell to include the Gemini agents for Threat Intelligence and Security Operations. With the recently announced acquisition of Wiz, Google sits on quite a formidable security operations portfolio: Mandiant threat feeds, native telemetry ingestion and alerting, case management, agentic workflows powered by a top-three model research lab, and now cloud security visibility and remediation.

For such a comprehensive and cutting-edge platform, you would expect people to talk about it. In the last 7 days on X, there have been 11 posts about it, all of them spam or automated corpo stuff.

There is a good reason, of course, why Google SecOps adoption could be slow: to get the most out of it, you should be running your full IT stack on GCP. This is why Enterprise sales execution becomes critical: the ability to drive massive consolidation of a customer onto your platform, as reflected in the "deals over $250 million" stat at the beginning.

Last month, Waymo launched in Atlanta, more than doubled its Austin service territory, and expanded its Los Angeles and San Francisco Bay Area territories by approximately 50%.

Waymo also launched Teen Accounts, starting with riders aged 14-17 in Phoenix.

Overall, great momentum here. The Waymo Driver has now autonomously driven over 100 million miles on public roads. And the team is testing across more than ten cities this year, including New York and Philadelphia. We hope to serve riders in all ten in the future.

The same story repeats itself with Waymo, the only viable alternative to Tesla for autonomous driving on the market. So far, the project has been a resounding success, yet it lags behind Tesla in visibility.

Source: X Radar

While the gap doesn't look that big, many of the posts actually come from the Tesla camp and express a preference for Tesla over Waymo (which is how Waymo ends up being mentioned).

Attention and traction matter, particularly when building a new customer category where users will end up asking themselves, "do I want to call a Robotaxi now or a Waymo?" Losing the mindshare lead, even at technological parity, is a disaster in the making.

Turning to the Google Cloud segment, which delivered very strong results this quarter. Revenues increased by 32% to $13.6 billion in the second quarter, reflecting growth in GCP across Core and AI products, at a rate that was much higher than Cloud’s overall revenue growth, and growth in Google Workspace driven by an increase in average revenue per seat and the number of seats.

Google Cloud operating income increased to $2.8 billion, and operating margin increased from 11.3% to 20.7%. The expansion in Cloud operating margin was driven by strong revenue performance and continued efficiencies in our expense base, partially offset by higher technical infrastructure usage costs, which includes the associated depreciation.

As we ramp our AI investments, we continue to focus on driving improvements in productivity and efficiency to offset growth in technical infrastructure related expenses, particularly from higher depreciation.

Google Cloud backlog increased 18% sequentially in Q2 and 38% year-over-year, reaching $106 billion at the end of the quarter. This growth was driven by strong demand for our products and services from both new and existing customers.

As Sundar mentioned, we have signed multiple billion-dollar-plus deals in the first half of the year.
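For readers who want to sanity-check the Q2 figures quoted above, the implied prior-period numbers can be backed out from the stated growth rates. This is back-of-the-envelope arithmetic on rounded figures, so the derived values are approximations rather than reported data; the `implied_prior` helper is just an illustrative name.

```python
# Q2 2025 Google Cloud figures as quoted on the call (billions of USD, rounded).
CLOUD_REVENUE_Q2 = 13.6
REVENUE_GROWTH_YOY = 0.32
CLOUD_OPERATING_INCOME_Q2 = 2.8
BACKLOG_Q2 = 106.0
BACKLOG_GROWTH_QOQ = 0.18
BACKLOG_GROWTH_YOY = 0.38


def implied_prior(current: float, growth: float) -> float:
    """Back out an earlier-period value from the current value and its growth rate."""
    return current / (1 + growth)


# Derived (not reported) values, computed from the rounded inputs above.
revenue_q2_2024 = implied_prior(CLOUD_REVENUE_Q2, REVENUE_GROWTH_YOY)
operating_margin = CLOUD_OPERATING_INCOME_Q2 / CLOUD_REVENUE_Q2
backlog_q1_2025 = implied_prior(BACKLOG_Q2, BACKLOG_GROWTH_QOQ)
backlog_q2_2024 = implied_prior(BACKLOG_Q2, BACKLOG_GROWTH_YOY)

print(f"Implied Q2 2024 Cloud revenue: ${revenue_q2_2024:.1f}B")
print(f"Operating margin from rounded inputs: {operating_margin:.1%}")
print(f"Implied Q1 2025 backlog: ${backlog_q1_2025:.1f}B")
print(f"Implied Q2 2024 backlog: ${backlog_q2_2024:.1f}B")
```

That puts year-ago Cloud revenue at roughly $10.3 billion and last quarter's backlog at roughly $90 billion. With the rounded inputs, the margin works out to about 20.6%, a hair under the reported 20.7%, which is consistent with rounding of the $2.8 billion and $13.6 billion figures.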

Still, GCP had their best quarter in... ever.

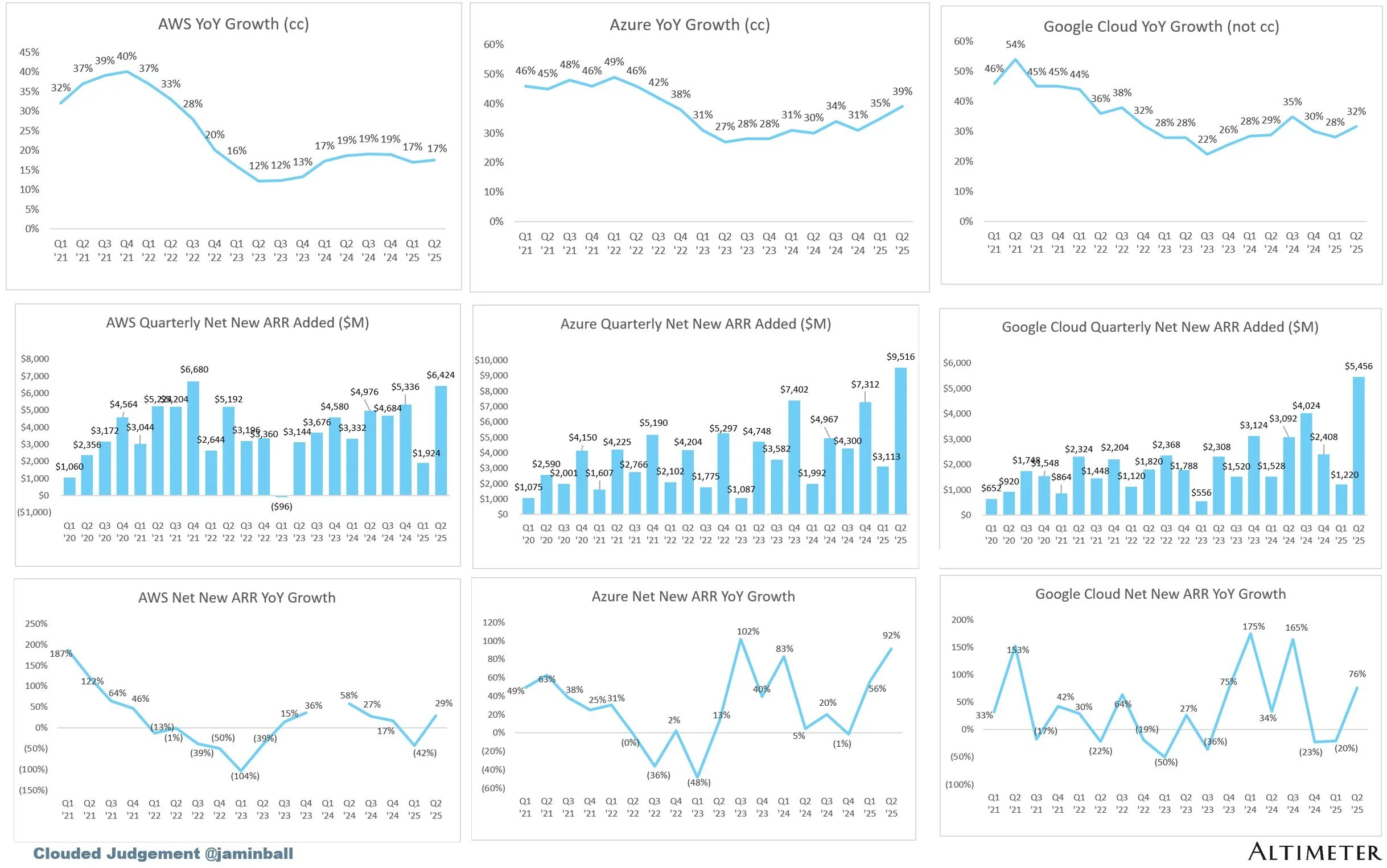

Source: Jamin Ball on X

While still completely outplayed by Azure, GCP is now approaching AWS's level of net-new ARR, which is clearly a success by any measure.

Brian Nowak (Morgan Stanley):

The first one, Sundar, there’s a lot of discussion about agentic search for commercial activities and agents that can be broadly deployed. Maybe, can you just, from a technology perspective, when you sit down with the engineering teams working on some of these new agentic capabilities that could come, what are some of the predominant technological hurdles that you think need to be cleared in order to launch scalable agents for commercial queries, is the first one.

Sundar Pichai, CEO, Alphabet and Google:

Look, overall, we are definitely, in many ways, when we built our series of 2.5 models, particularly with Pro, et cetera, it’s the direction where we are investing the most. There’s definitely exciting progress, including in the models we haven’t fully released yet.

The main gaps we are all trying to do is; you’re obviously chaining a sequence of events, and so, being able to do it reliably; the latency compounds, the cost compounds. And being able to do it reliably in a way for the users. All of this comes together. In each of this, we are making progress and it all needs to kind of hang together.

The good news is we are making robust progress. We think we are at the frontier there.

In all of these areas, when you look back in a 12-month basis, you end up making the models much more efficient for any given capabilities. So, the forward-looking trajectory, I think, will really unlock these agentic experiences. We see the potential of being able to do them, but they are a bit slow and costly and take time, and sometimes are brittle, right? But they’re making progress on all of that.

And I think that’s what we’ll really unlock, and I expect 2026 to be the year in which people kind of use agentic experiences more broadly. And so, it’s an exciting opportunity ahead.

Interestingly enough, this highlights one of the current structural challenges for AI agents: performance and efficiency. While many companies are excited about the big-picture outcome, today's agentic workflows, even when done right, add layers of steps that all consume compute. This raises the cost per task without guaranteeing the outcome, because of hallucinations, errors, latency, and so on. A failed query can force the whole chain of activity to restart, burning tokens again.

For most use cases right now, we need a step up in both performance and efficiency at scale: essentially, make the smaller models smarter so they scale more easily, and improve outcomes for the really complex but high-value tasks.
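A toy model makes the compounding point concrete. It assumes, hypothetically, that each step of an agentic workflow succeeds independently with the same probability, that every step burns the same number of tokens, and that a failure is only caught at the end, forcing a full restart. Under those assumptions, a chain of n steps succeeds with probability p^n, and the expected number of attempts is 1/p^n. The 10-step, 2,000-tokens-per-step scenario is illustrative, not measured.

```python
def chain_success_rate(step_success: float, steps: int) -> float:
    """Probability that every step succeeds, assuming steps fail independently."""
    return step_success ** steps


def expected_tokens(step_success: float, steps: int, tokens_per_step: int) -> float:
    """Expected tokens burned until one clean run of the whole chain.

    Assumes a failure is only detected after the full chain has run, so every
    attempt costs steps * tokens_per_step, and the number of attempts is
    geometric with success probability chain_success_rate(...).
    """
    if not 0 < step_success <= 1:
        raise ValueError("step_success must be in (0, 1]")
    attempts = 1 / chain_success_rate(step_success, steps)  # mean of a geometric distribution
    return attempts * steps * tokens_per_step


# Hypothetical scenario: a 10-step agent task at 2,000 tokens per step.
for p in (0.90, 0.95, 0.99):
    print(
        f"per-step success {p:.0%}: "
        f"chain success {chain_success_rate(p, 10):.1%}, "
        f"expected tokens {expected_tokens(p, 10, 2_000):,.0f}"
    )
```

In this toy model, raising per-step reliability from 90% to 99% cuts the expected token bill by more than half (from roughly 57,000 to about 22,000 tokens), while trimming tokens per step only lowers cost proportionally. That is one way to read the "make the smaller models smarter" argument.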

Source: Gemini CLI page.

This challenge is best illustrated by the launch of Gemini CLI. For the more technically challenged readers:

CLI stands for command-line interface, which most people would access through a terminal app on their device (e.g., Terminal on macOS).

In recent years, developers have migrated to coding interfaces built into applications (for example, Cursor), but CLIs are making a comeback because they make it easy to run a variety of tools from a single place.

Claude Code is this year's "breakout" star of Enterprise AI adoption, and while it can run inside a variety of other applications, most usage happens in the terminal.

Gemini CLI is basically a competitor, powered by the Gemini models. In theory, this should have been an easy win.

As with any other Google product, however, things did not play out that well. The primary issue is that while Gemini benchmarks really well on coding tasks and has a very large context window (i.e., it's very smart and should remember a lot of things), in practice it struggles as an agentic companion. The model often forgets things within the same session, and, worse, it struggles to use other tools.

Tool calling is Anthropic's bread and butter because, with Claude, it works almost all of the time. This means you can reliably have the coding agent interact with other applications and APIs, extracting important data or making changes effectively.
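For readers new to the concept, here is a minimal sketch of what "tool calling" involves: the model emits a structured request naming a tool and its arguments, and the surrounding harness parses and executes it. The JSON shape, the tool names (`read_file`, `run_tests`), and the `dispatch` function are hypothetical and do not reproduce the actual formats used by Claude Code or Gemini CLI.

```python
import json
from typing import Any, Callable


# Hypothetical tools a coding agent might be allowed to call (stubbed out here).
def read_file(path: str) -> str:
    return f"<contents of {path}>"


def run_tests(target: str) -> str:
    return f"<test results for {target}>"


TOOLS: dict[str, Callable[..., str]] = {"read_file": read_file, "run_tests": run_tests}


def dispatch(model_output: str) -> dict[str, Any]:
    """Parse a model's tool-call message and execute it, or report why it failed."""
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError:
        return {"ok": False, "error": "output was not valid JSON"}
    if not isinstance(call, dict):
        return {"ok": False, "error": "tool call must be a JSON object"}
    name = call.get("tool")
    if not isinstance(name, str) or name not in TOOLS:
        return {"ok": False, "error": f"unknown tool: {name!r}"}
    args = call.get("arguments", {})
    if not isinstance(args, dict):
        return {"ok": False, "error": "arguments must be a JSON object"}
    try:
        return {"ok": True, "result": TOOLS[name](**args)}
    except TypeError as exc:  # e.g. a misspelled or missing argument name
        return {"ok": False, "error": str(exc)}


# A well-formed call succeeds; a misspelled argument fails and the agent must retry.
print(dispatch('{"tool": "read_file", "arguments": {"path": "src/app.py"}}'))
print(dispatch('{"tool": "read_file", "arguments": {"file": "src/app.py"}}'))
```

Every malformed call (invalid JSON, an unknown tool, a misspelled argument) is a failed step that the agent has to notice and retry, which is why consistently well-formed tool calls matter so much for agentic coding.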

So when Sundar talks about "oh, next year is the year of the agents," what that really means from his perspective is that Gemini 3.0 needs to perform better and scale more efficiently across their infrastructure. This is particularly important due to the business realities of models:

Last year, the research lab spent Y dollars training a model.

This year, the product and sales teams monetize the model across the estate for, hopefully, Y + profit.

In parallel, the research lab is training a new model this year, which likely costs 2x or 3x Y.

Next year, the current model is retired (its revenue contribution complete), and the new model needs to monetize at least its training cost + profit.

To be profitable, research labs need to reach a point where they can monetize each model for longer before spending massive R&D on the next one. Most importantly, when they do spend heavily on R&D, the updated model also needs to be better in order to recoup the investment.
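The cycle described above can be sketched with simple arithmetic. All numbers here are hypothetical: a current model that cost Y = $1B to train, a successor costing 2.5x Y (the midpoint of the 2x to 3x range), and a 20% target profit on top of training cost. The `ModelGeneration` class is an illustrative name, and the sketch deliberately ignores inference serving costs and the time value of money, both of which matter in practice.

```python
from dataclasses import dataclass


@dataclass
class ModelGeneration:
    """One model generation in a simplified train-then-monetize cycle (hypothetical)."""

    name: str
    training_cost: float  # hypothetical training cost, in billions of USD
    service_years: float  # years the model is monetized before it is retired
    target_margin: float  # profit required on top of training cost (0.2 means 20%)

    def required_annual_revenue(self) -> float:
        """Revenue per year needed to recoup training cost plus the target margin."""
        return self.training_cost * (1 + self.target_margin) / self.service_years


Y = 1.0  # hypothetical training cost of the current model, in $B

current = ModelGeneration("current model", Y, service_years=1, target_margin=0.2)
successor = ModelGeneration("successor (2.5x Y)", 2.5 * Y, service_years=1, target_margin=0.2)
successor_longer = ModelGeneration(
    "successor, monetized 2 years", 2.5 * Y, service_years=2, target_margin=0.2
)

for gen in (current, successor, successor_longer):
    print(f"{gen.name}: ${gen.required_annual_revenue():.2f}B per year")
```

Stretching the successor's monetization window from one year to two halves the annual revenue it needs (from $3.0B to $1.5B in this example), which is the "monetize models for longer" point expressed in numbers.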

Google is the best-positioned company in this little game of hot potato because it can productize new models across a much bigger surface and drive more revenue from each one.

OpenAI is going so hard directly against Google because its best bet is to outplay Google on its own turf, potentially capturing enough business in search, browsers, and work applications that Google can no longer outlast it by soaking up the R&D costs of less successful model launches.

The wild card here is GCP. Just as AWS and Azure became the most important business lines within their companies, overtaking the "original golden goose," the fate of Alphabet may very well depend on GCP becoming its most important revenue driver.

Source: RepVue

The tech sales opportunity on either side of the bet is worth it.