The Tech Sales Newsletter #105: The OpenAI play

It's been an intense week in tech when it comes to AI. The top three model makers (OpenAI, Anthropic and Google DeepMind) all released new models, with GPT-5 overshadowing everything else.

The model marks a significant shift in the market from an efficiency and monetization perspective, while mostly trading blows in terms of performance with the rest of the pack.

Due to the systemic dependence across the tech ecosystem on OpenAI models, the release of a new LLM from the company has become a massive event, drawing attention and controversy across the board. Many well-known names on the market today depend on OpenAI's models to power the experiences and novel applications that have become the centerpiece of their business.

As such, intelligence benchmarks alone do not tell the full story. Let's dig in.

The key takeaway

For tech sales: If you are selling for an OpenAI wrapper, this week did not inspire a lot of confidence that your product will benefit from a tailwind of intelligence. If you are selling for OpenAI, the company is actually positioned quite well, with GPT-5 prioritising efficiency and the open-source release adding a new tool to attract investment from highly regulated customers.

For investors: Pure intelligence performance is not a moat until we see a frontier lab make a more significant jump and declare AGI. Distribution, efficiency and applications tailored for practical use cases are where the value is. As such, the thesis in this newsletter remains that most of the value will accrue at the bottom of the stack, and the biggest beneficiary of the evolution of GPT models is currently Microsoft.

Free and open OpenAI

OpenAI made two major announcements this week. The first was their long awaited open-source model, while the second involved a comprehensive overhaul of both the ChatGPT user experience and its cost curve.

Let's begin with the open-source model.

Source: OpenAI

GPT-OSS comes in two versions: a 20B one and a 120B option. In practice, what that means is that "the big boi" is the primary model that businesses should aim to use, while the 20B offers a competent lightweight version that can be further trained for agentic workflows or serve as a strong reasoning model on pretty much any device.

Performance sits close to o3 and o4 territory for most scenarios, which makes it competitive with the Chinese market leaders over the past 6 months—DeepSeek and Qwen.

This release, however, is not a "serious" attempt at winning open-source AI. The performance is adequate for mid-2025, and this will be a safer choice for most Western companies to deploy, whether due to budgeting or security restrictions. It's unlikely we will see big repeated revisions on a quick schedule. With Meta appearing to deprioritize open-source AI, and unless Musk holds true to his promise to release each old version of Grok to the market, it's likely that this will look very outdated in six months as DeepSeek R2 comes out.

New competitors in the West are interested in the space, however, as seen by Reflection AI (founded by ex-DeepMind researchers who worked on Gemini) who are currently raising $1B to offer an open-source model, together with their Enterprise-focused product.

Source: Reflection AI

Players like this are interesting (together with Ilya's and Mira's labs) because they represent a wildcard in the industry. GPT-OSS is not a wildcard. It's a play at hurting the unit economics of the other players for inference, while collecting brownie points.

Which brings us, of course, to the main release: GPT-5.

GPT-5

Source: Microsoft Build 2024 Keynote

GPT-5 was a massive release in all possible terms except raw performance.

More than 160,000 individuals watched the awkward keynote live, generating an almost Apple-like buzz around the event. The staggered release meant that not everybody would get access within the first 24 hours, and API customers across the board seemed to be the ones able to offer the model the fastest. The actual website serving the model also had an issue with the model router, which led to a lot of poor responses for early users, who were routed to the lower versions for questions that required more intelligence.

What is THE ROUTER, you may ask? Well, OpenAI has been under a lot of criticism for the last 12 months as the direct-to-consumer offering bloated to way too many versions, all offering different capabilities. Prior to GPT-5, users could access 4o, o3, o3-mini, o3-pro, 4.5 and others, all through the same interface. That's before we talk about activating applications on top of it, such as Deep Research or Agent use. While the power users have learned to navigate this, for your average consumer this didn't make a lot of sense, leaving 4o as "the default" that the vast majority of users are familiar with.

4o is a tricky model: less smart than the reasoning ones and highly deceptive. In the last month, a whole conspiracy theory has emerged around "LLM psychosis" and being "one-shotted by 4o," due to its compliant nature in essentially roleplaying back whatever the user throws at it. For a company that has currently found its strongest foundation in consumer AI, this had to change.

GPT-5 represents OpenAI's course correction on multiple fronts.

Source: OpenAI

Hidden behind GPT-5 are differently scaled versions of the model, and each request is routed on the spot based on the complexity of the ask. Here is how they describe the different models in the system card:

Source: OpenAI

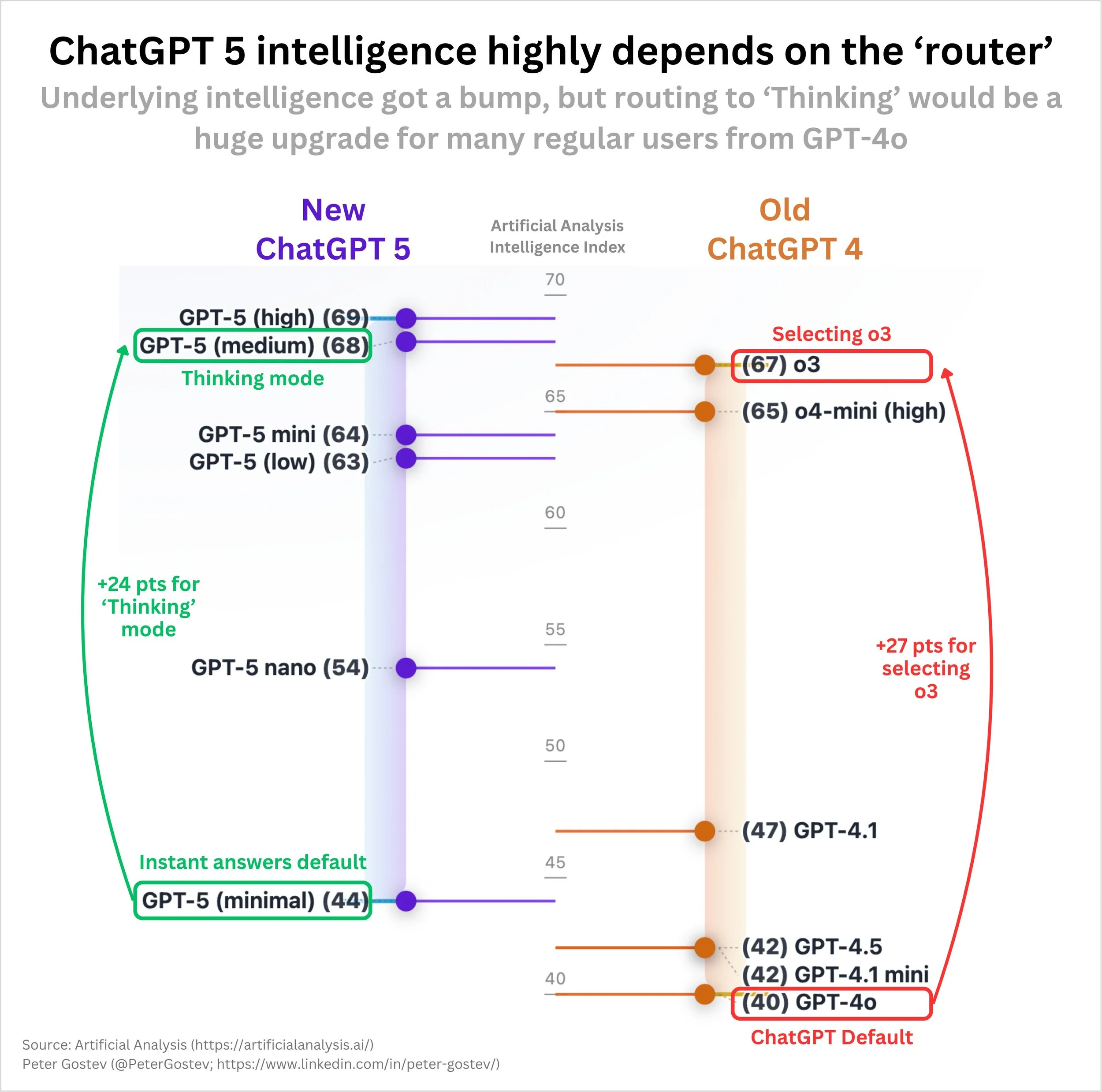

This creates confusion because OpenAI distinguishes between compute allocation for reasoning versus standard model inference. From an ML professional's benchmarking perspective:

Source: Peter Gostev

Unifying all models under a single router makes the front-end interaction with ChatGPT significantly easier from a user experience perspective. Whether the intelligence most users will interact with will be "as good" is a completely different story. Due to the inconsistent rollout and the "personality" change that removed most of the manipulative and deceptive behavior, the initial user sentiment on the consumer side has been quite negative, leading to OpenAI even bringing out 4o as an option under a legacy menu for paid users.

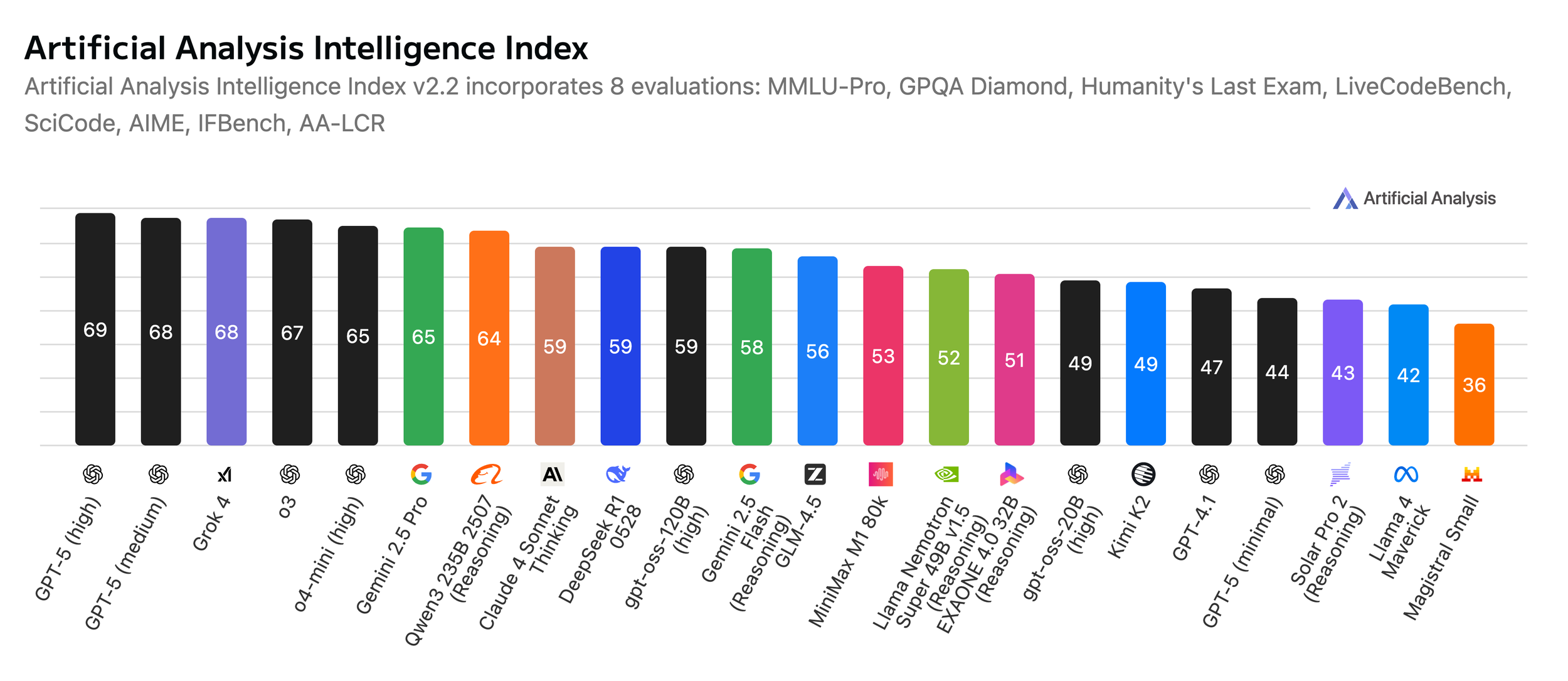

Source: Artificial Analysis

If we look at the extensive testing done by third parties, the current frontier models tend to perform on similar levels when stack-ranked across a variety of benchmarks. GPT-5's high-compute reasoning is the best model in the world, sure, but at least on raw performance it's difficult to call this a leap.

Source: Artificial Analysis

Price per token is an irrelevant metric if you don't understand the quantity of tokens it requires to get to an outcome. If we pick up the performance benchmarking and then quantify the costs to get there, things take a rapid turn.

GPT-5 Thinking is the best model in the world, while costing significantly less to run than Grok and Gemini. Anthropic's Sonnet is cheaper but also significantly worse in terms of raw performance. Claude Opus should benchmark closer, but it's not even on the chart, partly because it's the most expensive model to run today. Note also the open-source play with GPT-OSS 120B performing at similar levels to DeepSeek, while costing 94% less.

This is a strong warning shot across the industry. The primary reason it achieves similar performance to other models with far fewer generated tokens is likely that GPT-5 is a lot more compliant in following direction and feedback from users, while significantly reducing the deceptive behavior seen with 4o. To put it more simply, when used with reasoning it does what you tell it to do and it does it well, with less fluffy language.
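To make the "price per token versus tokens to an outcome" point concrete, here is a minimal sketch. The function name and every number in it are hypothetical and chosen only for illustration; none of them are real vendor prices or benchmark token counts.

```python
def cost_to_outcome(price_per_million_tokens: float, tokens_used: int) -> float:
    """Total spend to reach one outcome: unit price times tokens consumed."""
    return price_per_million_tokens * tokens_used / 1_000_000


# Hypothetical figures for illustration only, not real pricing or usage data.
# Model A looks cheaper per token but needs 4x the tokens to finish the job.
model_a_cost = cost_to_outcome(price_per_million_tokens=5.0, tokens_used=40_000_000)
model_b_cost = cost_to_outcome(price_per_million_tokens=10.0, tokens_used=10_000_000)

print(f"Model A: ${model_a_cost:,.2f}")
print(f"Model B: ${model_b_cost:,.2f}")
```

Under these assumed inputs, the model with half the price per token ends up costing twice as much to reach the same result, which is why the token count matters as much as the price list.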

The big picture

This is a rocky moment for OpenAI. GPT-5 was in development for close to 2 years, with many of the key researchers leaving during this period. The company captured an incredible level of demand across both consumer and enterprise applications (driven by Azure's incredible team).

Source: The Information

It's difficult to get a clear understanding of what drives this revenue growth. ChatGPT has 700 million users in total, the vast majority of whom will obviously not be monetized until ads become part of the application. The OpenAI CFO said this week that they have 5 million business seats, which, when accounting for discounts, is likely around $1B of revenue. The bulk of revenue then essentially comes from paid consumers and API usage, plus the consulting business that is just starting up.
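As a quick sanity check on the business-seat estimate, the sketch below backs out the average revenue per seat implied by the two figures in the paragraph above (5 million seats, roughly $1B of revenue). The function name is hypothetical, and the $1B input is this newsletter's estimate, not a reported number.

```python
def implied_revenue_per_seat(annual_revenue: float, seats: int) -> float:
    """Average annual revenue per seat implied by a revenue estimate."""
    return annual_revenue / seats


# Inputs taken from the text: ~$1B estimated revenue across 5 million seats.
per_seat_per_year = implied_revenue_per_seat(annual_revenue=1_000_000_000, seats=5_000_000)
per_seat_per_month = per_seat_per_year / 12

print(f"${per_seat_per_year:,.0f} per seat per year")
print(f"${per_seat_per_month:.2f} per seat per month")
```

That works out to about $200 per seat per year, which is the blended, post-discount average the $1B estimate implies.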

Typically we would also analyze feedback from the field, but OpenAI operates with extreme NDAs. The majority of employees do not have any titles that can identify their core function (but it can be inferred based on historical roles) and there is almost no usage of public platforms like RepVue to leave reviews or insights.

The relationship between Microsoft and OpenAI remains one of the trickiest challenges to navigate. The terms and conditions of their agreement were a big win for OpenAI back in 2019, essentially giving it a preferred-partner lane with access to massive amounts of compute and the distribution power of Azure. In return, they would owe 20% of their revenue to Microsoft until 2030 (or until AGI is announced, whichever comes first), while also giving all of their IP to Microsoft for free to license and distribute.

Today, this deal looks very rough. Sam Altman is trying to pivot away from it, both by turning OpenAI into a commercial organization rather than a non-profit and by flirting with "announcing AGI as achieved." He has also spent significant amounts of time cutting deals with a variety of other players, Oracle and SoftBank being the most notable.

Source: Crunchbase

The negotiation with Microsoft has already led to delays on certain plans by OpenAI, such as the acquisition of Windsurf. They ended up backing out of the deal because the IP for Windsurf would also end up being owned by Microsoft, which literally develops VS Code, the editor that Cursor and Windsurf were forked from.

Source: The Information

It's clear that GPT-5 is an attempt to put efficiency and outcome-based pricing at the forefront of the product. This is needed both because it improves their hand in case things get more contentious with Microsoft and because it should give them a little more breathing room while 20% of their revenue goes to Microsoft, essentially killing any chance of a positive margin.

On the product side they need to achieve 3 outcomes:

Towards (pro)consumers: Improve average revenue per customer and reduce the cost of subsidizing free usage. The best way to achieve this is to minimize unproductive usage (essentially the companion aspect of 4o usage), push as many queries as possible to lower compute configurations without significant penalties on retention, and push paying users into the Pro tier. GPT-5 is clearly aimed in this direction, particularly because it significantly reduces the Plus subscription benefits. Both the number of messages and the context window on Plus have been reduced to the point where it's no longer useful as a primary subscription for heavy users. When you also account for the fact that the best performance comes with GPT-5 Pro, the need for the highest subscription is obvious.

Towards developers: Developers predominantly need to use the API and will do so through application layers with quality-of-life improvements. There is a reason why the Cursor team was positioned quite heavily in the presentation. The problem here is that Claude Code appears to be strongly preferred by developers as a primary tool for agentic workflows (the most token-consuming ones). Hence what looks like a joint play with Cursor to offer GPT-5 as the best model for the newly launched Cursor CLI agent (with free usage during the first days of the launch). Whether this will be a successful strategy remains to be seen, but Anthropic is on pace to reach 40% of OpenAI's revenue this year thanks to dominating with developers, and this use case is too important to play catch-up on.

Towards businesses/Enterprise: This is a highly awkward product currently. Revenue in the range of $1B is negligible at their size, and Microsoft offers an essentially equivalent product in terms of average outcomes through Copilot for Business. The most confusing part is that Enterprise usage doesn't include the Pro model and the increased limits on Deep Research, both of which are the essential killer apps for pro users. Google pulled a similarly confusing move by launching an Ultra plan that's not available for businesses and includes their Deep Think mode, which is highly competitive. If GPT-5 is meant to improve outcomes in this direction, it's difficult to see how.

More importantly, for OpenAI to "escape the Microsoft death grip" and establish itself as the most valuable company long-term in tech, it needs to win in all 3 directions. The potential for this lies with launching their own browser and productivity applications. I spoke about this last week when discussing Google, who are arguably the biggest competitor today to OpenAI.

This is particularly important due to the business realities of models:

1. Last year, the research lab trained a model and spent Y dollars on it.

2. This year, the product and sales teams monetize that model across the estate for, hopefully, Y + profit.

3. In parallel, the research lab is training a new model this year, which likely costs 2x or 3x Y.

4. Next year, the current model will be retired (ending its revenue contribution) and the new model needs to earn back at least its own training cost + profit.
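The four steps above can be sketched as a toy cash-flow model. Everything here is an assumption made for illustration: the function name, the one-model-per-year cadence, and the specific multipliers are hypothetical and not figures from any lab.

```python
def lab_net_cash_by_year(
    first_training_cost: float,
    cost_multiplier: float,
    revenue_multiple: float,
    years: int,
) -> list[float]:
    """Yearly net cash for a lab that ships one model generation per year.

    Each year the lab monetizes the model it trained the year before
    (earning revenue_multiple times that model's training cost) and pays
    to train the next model, which costs cost_multiplier times as much.
    """
    net_by_year = []
    training_cost = first_training_cost  # step 1: last year's spend, Y
    for _ in range(years):
        revenue = training_cost * revenue_multiple             # step 2: Y + profit
        next_training_cost = training_cost * cost_multiplier   # step 3: 2x or 3x Y
        net_by_year.append(revenue - next_training_cost)
        training_cost = next_training_cost                     # step 4: old model retired
    return net_by_year


# Hypothetical inputs: Y = 1 (in arbitrary units), each model earns back 1.5x
# its training cost, and each new model costs 2x the previous one.
net = lab_net_cash_by_year(
    first_training_cost=1.0, cost_multiplier=2.0, revenue_multiple=1.5, years=3
)
print(net)
```

Under these assumed inputs, every model individually "makes a profit" on its own training cost, yet the lab is cash-negative every year and the gap doubles each time, because the next training run always costs more than the current model brings in.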

To be profitable, research labs need to reach a point where they can monetize models for longer before they have to take on the massive R&D cost of the next model. Most importantly, if they do spend heavily on R&D, the updated model also needs to be better in order to recoup the investment.

Google is the best-positioned company in this little game of hot potato because they can productize new models across a much bigger surface and drive revenue from each model.

OpenAI is going so hard directly against them because their best bet is to essentially outplay Google on its own turf, potentially capturing enough business from search, browsers, and work applications that Google can't simply outlast them by soaking up the R&D cost of less successful model launches in the future.

Source: Polymarket

While Elon is likely going to have the best model on paper with Grok 5, the product still doesn't feel integrated enough to become a day-to-day replacement in a variety of use cases where the other frontier labs excel.

Anthropic's crown in code will last until somebody else delivers an agent that behaves at least on the same level but for significantly fewer tokens.

So for OpenAI to survive and thrive, they have to beat Google on its own turf, aggressively taking over search and productivity applications. In that context, GPT-5 was a defensive move, not an offensive one.