The Tech Sales Newsletter #66: Q3’24 in cloud infrastructure software

Every quarter I do a deep dive on the latest earnings calls from the 3 cloud hyperscalers (AWS/Azure/GCP). While quarterly reviews are not necessary for most companies, in the context of cloud infrastructure software they are critical.

The majority of value accrues at the bottom of the cloud infrastructure stack, and since all 3 hyperscalers transact significant amounts of software through their marketplaces, their results are the best place to get a strong understanding of how the industry is doing.

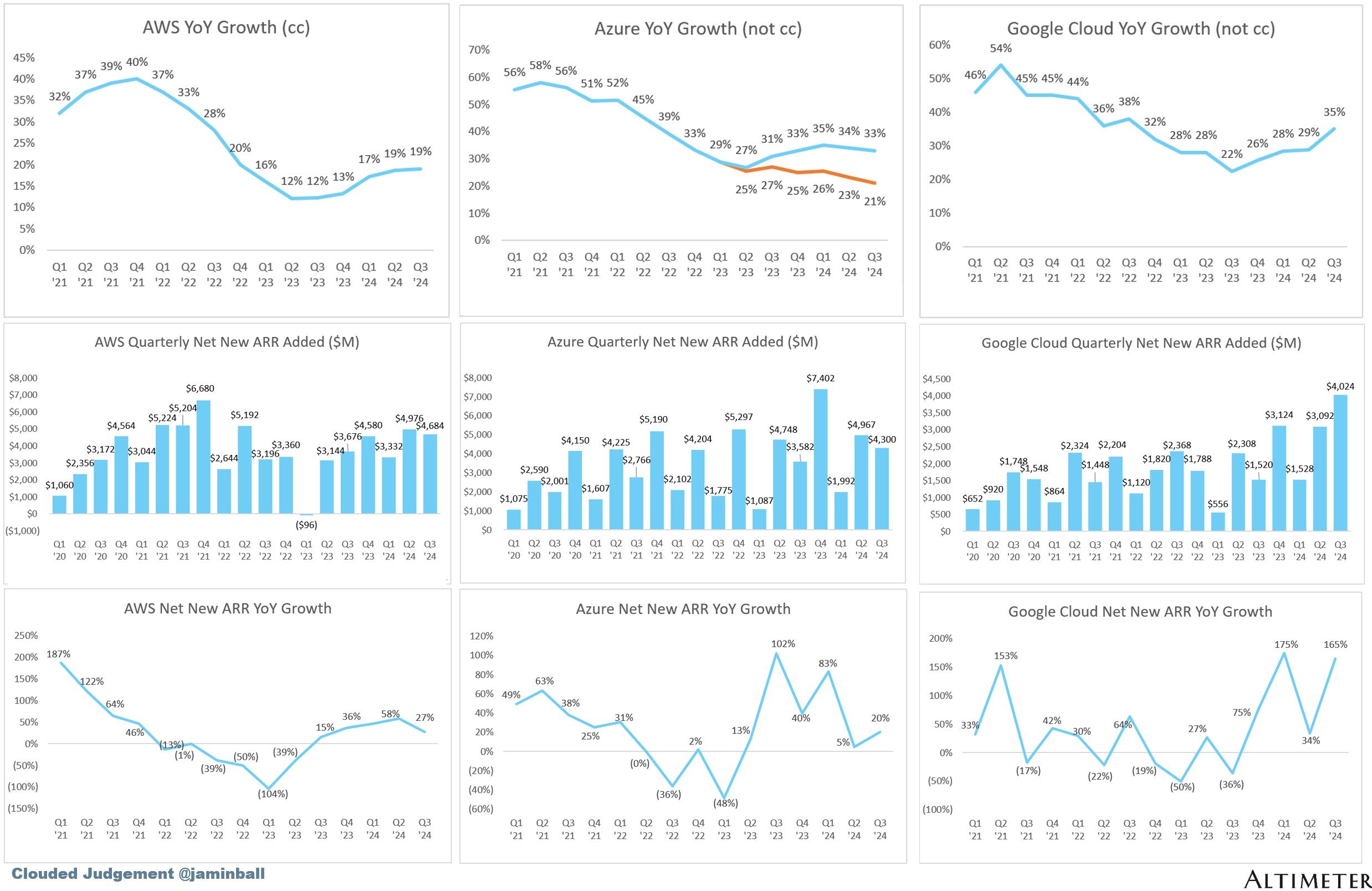

Source: Clouded Judgement

The key takeaway

For tech sales: While the software market has had a slow first half, in Q3 we are seeing an acceleration of cloud adoption, particularly when it comes to GenAI workloads. AWS and GCP continue to go through internal changes as they try to improve productivity; hiring is either stagnant or they are reducing headcount via PIPs. Azure remains the best place right now to sell AI workloads, as they are close to reaching $10 billion ARR in AI-related workloads.

For investors: Not all AI-related revenue is equal. The big takeaway from this round of earnings calls was that Microsoft is significantly outperforming the rest of the pack. It leads not only in sheer AI revenue but also in generating that revenue purely from inference. This is what enterprise adoption at scale looks like. By comparison, AWS and GCP remain 12 to 18 months behind in execution, if not necessarily in technology.

GCP

Sundar Pichai: It has three components. First, a robust AI infrastructure that includes data centers, chips and a global fiber network. Second, world-class research teams who are advancing our work with deep technical AI research and who are also building the models that power our efforts. And third, a broad global reach through products and platforms that touch billions of people and customers around the world, creating a virtuous cycle.

Let me quickly touch on each of these. We continue to invest in state-of-the-art infrastructure to support our AI efforts from the U.S. to Thailand to Uruguay. We are also making bold clean energy investments, including the world's first corporate agreement to purchase nuclear energy from multiple small modular reactors, which will enable up to 500 megawatts of new 24/7 carbon-free power. We are also doing important work inside our data centers to drive efficiencies while making significant hardware and model improvements.

This earnings call is particularly interesting because GCP delivered great results and there was meaningful progress on several elements critical to Alphabet's long-term success. Arguably the biggest news, besides the tech sales performance, is the bespoke deals the company is signing to secure energy capacity, which will allow it to run its data centers at full capacity.

Sundar Pichai: Today, more than a quarter of all new code at Google is generated by AI, then reviewed and accepted by engineers. This helps our engineers do more and move faster. I'm energized by our progress and the opportunities ahead, and we continue to be laser-focused on building great products.

This caught a lot of attention in the news, which was likely the intention. In practice, the vast majority of this “25% of all code” comes from code completion (i.e., a developer starts writing a function, and the system proposes how to finish it). This led to a lot of “ugh, actually!!!!” reactions from the resident AI bears, but that misses the main point: the productivity benefits of LLMs are now becoming the new normal in large organizations.

Sundar Pichai: Next, Google Cloud. I'm very pleased with our growth.

This business has real momentum and the overall opportunity is increasing as customers embrace GenAI. We generated Q3 revenues of $11.4 billion, up 35% over last year with operating margins of 17%. Our technology leadership and AI portfolio are helping us attract new customers, win larger deals and drive 30% deeper product adoption with existing customers. Customers are using our products in five different ways.

This is the first quarter in a while in which GCP outperformed Azure on YoY growth (albeit from a smaller installed base).

Sundar Pichai: First, our AI infrastructure, which we differentiate with leading performance driven by storage, compute and software advances, as well as leading reliability and a leading number of accelerators. Using a combination of our TPUs and GPUs, LG AI Research reduced inference processing time for its multimodal model by more than 50% and operating costs by 72%. Second, our enterprise AI platform, Vertex, is used to build and customize the best foundation models from Google and the industry. Gemini API calls have grown nearly 14x in a 6-month period.

When Snap was looking to power more innovative experiences within their My AI chatbot, they chose Gemini's strong multimodal capabilities. Since then, Snap saw over 2.5 times as much engagement with My AI in the United States. Third, customers use our AI platform together with our data platform, BigQuery, because we analyze multimodal data no matter where it is stored with ultra-low latency access to Gemini. This enables accurate real-time decision-making for customers like Hiscox, one of the flagship syndicates in Lloyd's of London, which reduced the time it took to code complex risks from days to minutes.

These types of customer outcomes, which combine AI with data science, have led to 80% growth in BigQuery ML operations over a 6-month period. Fourth, our AI-powered cybersecurity solutions, Google Threat Intelligence and Security Operations, are helping customers like BBVA and Deloitte prevent, detect and respond to cybersecurity threats much faster. We have seen customer adoption of our Mandiant-powered threat detection increase 4x over the last 6 quarters. Fifth, in Q3, we broadened our applications portfolio with the introduction of our new customer engagement suite.

It's designed to improve the customer experience online and in mobile apps, as well as in call centers, retail stores and more. A great example is Volkswagen of America, who is using this technology to power its new myVW Virtual Assistant. In addition, the employee agents we delivered through Gemini for Google Workspace are getting superb reviews. 75% of daily users say it improves the quality of their work.

Before we discuss these individually, let’s calibrate with an insider view:

Source: RepVue

It’s clear that we are seeing a step up in sales execution, with a deeper understanding of how to drive AI-related consumption across the portfolio. If you are not familiar with why this is important in relation to Vertex:

BigQuery serves as a scalable data warehouse that can directly feed data into Vertex AI's machine learning pipelines, enabling data preprocessing and feature engineering through SQL before model training. The integration eliminates the need for separate data transfer systems, as Vertex AI can read directly from BigQuery tables for both training and inference, making the workflow simpler and more efficient.
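Here is a minimal local sketch of the same BigQuery-to-Vertex pattern. Features are computed with SQL where the data lives, and the query result feeds a training step directly, with no separate export job. Python's built-in `sqlite3` stands in for BigQuery, and an ordinary least-squares line fit stands in for a Vertex AI training job. The `usage_events` table, its columns and the sample rows are all hypothetical. None of this uses Google's client libraries.

```python
from __future__ import annotations

import sqlite3


def build_features(conn: sqlite3.Connection) -> list[tuple[str, int, float]]:
    """Feature engineering expressed as SQL, run where the data lives."""
    query = """
        SELECT customer_id,
               COUNT(*)     AS n_queries,
               SUM(scan_gb) AS total_scan_gb
        FROM usage_events
        GROUP BY customer_id
        ORDER BY customer_id
    """
    return conn.execute(query).fetchall()


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares for y = intercept + slope * x (stand-in for a training job)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def train_from_warehouse(conn: sqlite3.Connection) -> tuple[float, float]:
    # Rows flow straight from the SQL result into training: no export/transfer step.
    rows = build_features(conn)
    xs = [float(n_queries) for _, n_queries, _ in rows]
    ys = [total_gb for _, _, total_gb in rows]
    return fit_line(xs, ys)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE usage_events (customer_id TEXT, scan_gb REAL)")
    conn.executemany(
        "INSERT INTO usage_events VALUES (?, ?)",
        [("a", 1.0), ("a", 3.0)] + [("b", 2.0)] * 3 + [("c", 2.0)] * 4,
    )
    print(train_from_warehouse(conn))  # prints (0.0, 2.0)
```

In the real products, the SQL would run inside BigQuery and Vertex AI would read the resulting table. The design point is the same either way: the feature logic stays in SQL, next to the data.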

This is why I consider AI growth critical for cloud infrastructure software - there is a significant flywheel effect across the whole segment. BigQuery was already one of GCP’s flagship enterprise products, and the fact that it’s getting the spotlight in this earnings call suggests that it’s getting close to the revenue of pure-play data warehouse vendors like Snowflake.

Another important shift in tone is the focus on explaining improved customer outcomes. This has been a noticeable weakness in GCP’s previous earnings calls. Highlighting new use cases helps in understanding what the company sees as its most high-profile implementations.

Philipp also highlighted in detail Google’s own usage of AI in its flagship products:

Philipp Schindler: Let me share two new ad experiences we have rolled out alongside our popular AI-powered features in search. First, as you heard from Sundar, every month, Lens is used for almost 20 billion visual searches with 1 in 4 of these searches having commercial intent. In early October, we announced product search on Google Lens. And in testing this feature, we found that shoppers are more likely to engage with content in this new format.

We're also seeing that people are turning to Lens more often to run complex multimodal queries, voicing a question or inputting text in addition to a visual. Given these new user behaviors, earlier this month, we announced the rollout of shopping ads above and alongside relevant Lens visual search results to help better connect consumers and businesses. Second, AI Overviews, where we have now started showing search and shopping ads within the overview for mobile users in the U.S. As you remember, we've already been running ads above and below AI Overviews. We're now seeing that people find ads directly within AI Overviews helpful because they can quickly connect with relevant businesses, products and services to take the next step at the exact moment they need.

As I've said before, we believe AI will revolutionize every part of the marketing value chain. Let's start with creative. Advertisers now use our Gemini-powered tools to build and test a larger variety of relevant creatives at scale. Audi used our AI tools to generate multiple video, image and text assets in different lengths and orientations out of existing long-form videos. It then fed the newly generated creatives into Demand Gen to drive reach, traffic and bookings to their driving experience.

The campaign increased website visits by 80% and increased clicks by 2.7 times, delivering a lift in their sales. Last week, we updated image generation in Google Ads with our most advanced text-to-image model, Imagen 3, which we tuned using ads performance data from multiple industries to help customers produce high-quality imagery for their campaigns. Advertisers can now create even higher-performing assets for PMax, Demand Gen, App and Display campaigns. Turning to media buying.

AI-powered campaigns help advertisers get faster feedback on what creatives work, where and redirect their media buying. Using Demand Gen, DoorDash tested a mix of image and video assets to drive more impact across Google and YouTube's visually immersive surfaces. They saw a 15 times higher conversion rate at a 50% more efficient cost per action when compared to video action campaigns alone.

There is a whole different conversation to be had around whether Google has already reached its “peak ad business” moment, with users consistently leaving for alternatives due to the poor performance of Google Search. I have reservations about stuffing heavy advertising into what is essentially the future of Google Search before it has even reached wide adoption.

Nonetheless, if we focus purely on the tech aspect, MarTech is about to change further. Google is clearly stepping into the content generation and management part of the workflow while already controlling a significant portion of the digital ad market. There are significant questions around who will provide the computing capacity that other incumbents in the space need in order to compete.

Ross Sandler: So first, Sundar, given the high stakes around native AI product usage, are there any milestones you can share around where Gemini usage is compared to the 250 million weekly active users that ChatGPT is seeing right now? And then the second question is, I'm sure this is something you guys have been thinking about for a while, but it looks like the way that the Google versus DOJ search trial is going, there's a decent likelihood that the Apple ISA contract and some of the Android pre-install contracts are going to be voided out at some point in the future.

So I guess the question is, what plans do we have in place to recapture some of the usage that might be going away in those search access points? How can we gain share on iOS queries if the Safari toolbar access point were to change? Thank you very much.

Sundar Pichai: Thanks, Ross. Look, I think, you know, obviously, we are serving Gemini across a lot of touch points, including it's now over 1 billion people are using it in search accessing. We are getting it across our products. The Gemini app itself has very strong momentum on user growth. Our API volume, I commented on the Gemini APIs having gone up 14x in the past 6 months. So we are seeing growth across the board.

Sundar Pichai: And the Gemini integration into Google Assistant is going super well on Android. The user feedback is positive. So we are continuing to roll that out more. So I think we'll - you will see us we are investing in the next generation of models. And as part of that, we are investing in scaling up the usage of the - both directly to the models as both on the consumer and the developer side. So I think I'm pleased with the momentum there.

The call ended with a lacklustre defence of the massive gap between ChatGPT and Gemini adoption. Rather than quantifying the consumer user base Gemini currently has, Sundar tried to reframe the answer around API calls (ugh, enterprise adoption!!) and people using Gemini through Google Assistant (which doesn’t generate revenue directly). There is a lot riding on the new Gemini launch expected later in the year - GCP could benefit from big headlines claiming the model is now better than Claude and ChatGPT.

AWS

Andy Jassy: AWS grew 19.1% year-over-year and now stands at a $110 billion annualized run rate. We've seen significant reacceleration of AWS growth for the last four quarters. With the broadest functionality, the strongest security and operational performance and the deepest partner community, AWS continues to be a customer's partner of choice.

There are signs of this in every part of AWS's business. We see more enterprises growing their footprint in the cloud, evidenced in part by recent customer deals with the ANZ Banking Group, Booking.com, Capital One, Fast Retailing, Itaú Unibanco, National Australia Bank, Sony, T-Mobile, and Toyota. You can look at our partnership with NVIDIA called Project Ceiba, where NVIDIA has chosen AWS's infrastructure for its R&D supercomputer due in part to AWS's leading operational performance and security. And you can see how AWS continues to innovate in its infrastructure capabilities.

At AWS’s scale, 19% YoY growth is impressive, especially compared with Q3 '23, when growth bottomed out at 12% YoY during the workload optimization cycle. To put this in more practical terms, AWS generated $4.7B in net new ARR versus $4.3B for Azure and $4B for GCP, i.e., it is outperforming the other vendors in winning new workloads over the whole of 2024 so far.
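The run-rate figures quoted in these calls come from simple arithmetic, and a short sketch makes them easier to compare. It assumes the common convention that the annualized run rate is one quarter's revenue times four. It uses only numbers quoted in the calls: $110B and 19.1% for AWS, and $11.4B and 35% for Google Cloud. The function names are illustrative. The $4.7B/$4.3B/$4B net new ARR figures above depend on prior-quarter numbers that are not reproduced here, so the sketch does not recompute them.

```python
def run_rate(quarterly_revenue_b: float) -> float:
    """Annualized run rate: one quarter's revenue multiplied by four."""
    return quarterly_revenue_b * 4


def implied_prior(current: float, yoy_growth: float) -> float:
    """Back out the year-ago figure from a current figure and its YoY growth rate."""
    return current / (1 + yoy_growth)


def net_new_arr(current_arr_b: float, previous_arr_b: float) -> float:
    """Net new ARR is the change in run rate between two periods."""
    return current_arr_b - previous_arr_b


if __name__ == "__main__":
    # AWS: $110B annualized run rate, growing 19.1% YoY (as quoted in the call).
    aws_prior = implied_prior(110.0, 0.191)
    print(f"AWS year-ago run rate ~${aws_prior:.1f}B, "
          f"net new ARR over 12 months ~${net_new_arr(110.0, aws_prior):.1f}B")
    # Google Cloud: $11.4B quarterly revenue, growing 35% YoY.
    print(f"Google Cloud run rate ~${run_rate(11.4):.1f}B, "
          f"year-ago quarter ~${implied_prior(11.4, 0.35):.2f}B")
```

On these inputs, AWS's year-ago run rate works out to roughly $92.4B, which means about $17.6B of run rate was added over twelve months.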

Andy Jassy: With deliveries like Aurora Limitless Database, which extends AWS's very successful relational database to support millions of database writes per second and manage petabytes of data whilst maintaining the simplicity of operating a single database or with our custom Graviton4 CPU instances, which provide up to nearly 40% better price performance versus other leading x86 processors. Companies are focused on new efforts again, spending energy on modernizing their infrastructure from on-premises to the cloud. This modernization enables companies to save money, innovate more quickly, and get more productivity from their scarce engineering resources. However, it also allows them to organize their data in the right architecture and environment to do Generative AI at scale.

Similar to the GCP humblebrag about BigQuery, Andy put the renewed focus on AWS’s own database products very early in the story. Growth metrics were missing, however, which makes it difficult to tell whether customers are picking them over the products offered by ISVs.

Andy Jassy: It's much harder to be successful and competitive in Generative AI if your data is not in the cloud. The AWS team continues to make rapid progress in delivering AI capabilities for customers in building a substantial AI business. In the last 18 months, AWS has released nearly twice as many machine learning and GenAI features as the other leading cloud providers combined. AWS's AI business is a multibillion-dollar revenue run rate business that continues to grow at a triple-digit year-over-year percentage and is growing more than 3 times faster at this stage of its evolution as AWS itself grew, and we felt like AWS grew pretty quickly.

While the "triple digit" statement sounds exciting, it is growth off a weak base: AWS's 2023 performance in GenAI upsells was subpar, so it's not exactly something to get excited about yet.

Andy Jassy: We talk about our AI offering as three macro layers of the stack, with each layer being a giant opportunity and each is progressing rapidly. At the bottom layer, which is for model builders, we were the first major cloud provider to offer NVIDIA's H200 GPUs through our EC2 P5e instances. And thanks to our networking innovations like Elastic Fabric Adapter and Nitro, we continue to offer advantaged networking performance. And while we have a deep partnership with NVIDIA, we've also heard from customers that they want better price performance on their AI workloads.

As customers approach higher scale in their implementations, they realize quickly that AI can get costly. It's why we've invested in our own custom silicon in Trainium for training and Inferentia for inference. The second version of Trainium, Trainium2 is starting to ramp up in the next few weeks and will be very compelling for customers on price performance. We're seeing significant interest in these chips, and we've gone back to our manufacturing partners multiple times to produce much more than we'd originally planned.

Trainium doesn't run CUDA. It is a very niche product for companies with deep ML expertise that are willing to put in the R&D effort to adapt their workflows in order to save on training and inference costs. Customers include Databricks and Anthropic.

Andy Jassy: We also continue to see increasingly more model builders standardize on Amazon SageMaker, our service that makes it much easier to manage your AI data, build models, experiment, and deploy to production. This team continues to add features at a rapid clip, punctuated by SageMaker's unique HyperPod capability, which automatically splits training workloads across more than 1,000 AI accelerators, prevents interruptions by periodically saving checkpoints, and automatically repairs faulty instances from their last saved checkpoint, saving training time by up to 40%. At the middle layer, where teams want to leverage an existing foundation model customized with their data and then have features to deploy high-quality Generative AI applications, Amazon Bedrock has the broadest selection of leading foundation models and the most compelling modules for key capabilities like model evaluation, guardrails, RAG and agents. Recently, we've added Anthropic's Claude 3.5 Sonnet model, Meta's Llama 3.2 models, Mistral's Large 2 models and multiple Stability AI models.

We also continue to see teams use multiple model types from different model providers and multiple model sizes in the same application. There's much orchestration required to make this happen. And part of what makes Bedrock so appealing to customers, and why it has so much traction, is that Bedrock makes this much easier. Customers have many other requests: access to even more models, making prompt management easier, further optimizing inference costs, and our Bedrock team is hard at work making this happen.
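The HyperPod behavior described above is a managed version of a generic pattern: save training state periodically and, on failure, restart from the last checkpoint rather than from step zero. The sketch below shows only that generic pattern in plain Python. It is not SageMaker's API. The JSON checkpoint format and the `fail_at` fault injection are hypothetical, and a running sum stands in for real model updates.

```python
from __future__ import annotations

import json
import os
import tempfile
from typing import Optional


def load_checkpoint(path: str) -> dict:
    """Return the last saved training state, or a fresh one if no checkpoint exists."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0, "loss_sum": 0.0}


def save_checkpoint(path: str, state: dict) -> None:
    """Write to a temp file, then atomically rename, so a crash never leaves a half-written checkpoint."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)


def train(total_steps: int, ckpt_path: str, every: int,
          work_log: list, fail_at: Optional[int] = None) -> dict:
    """Run (or resume) training, checkpointing every `every` steps."""
    state = load_checkpoint(ckpt_path)  # resume from the last checkpoint, if any
    while state["step"] < total_steps:
        if state["step"] == fail_at:  # hypothetical fault injection
            raise RuntimeError(f"simulated node failure after step {fail_at}")
        state["step"] += 1
        state["loss_sum"] += 1.0 / state["step"]  # stand-in for one real training step
        work_log.append(state["step"])  # record every step of compute actually spent
        if state["step"] % every == 0:
            save_checkpoint(ckpt_path, state)
    return state


def run_demo(total_steps: int = 10, every: int = 4, fail_at: int = 6) -> tuple[dict, list]:
    """Fail once mid-run, then restart from the last checkpoint, as a supervisor would."""
    work_log: list = []
    with tempfile.TemporaryDirectory() as tmp_dir:
        ckpt_path = os.path.join(tmp_dir, "checkpoint.json")
        try:
            train(total_steps, ckpt_path, every, work_log, fail_at=fail_at)
        except RuntimeError:
            pass  # a real system would replace the faulty instance here
        final_state = train(total_steps, ckpt_path, every, work_log)
    return final_state, work_log


if __name__ == "__main__":
    final_state, work_log = run_demo()
    print(final_state["step"], len(work_log))  # prints 10 12
```

The demo runs 10 steps with a checkpoint every 4 steps and a simulated failure after step 6. It redoes only steps 5 and 6, for 12 steps of work instead of the 16 a restart from scratch would need. Checkpoint frequency is the tuning knob: more frequent saves lose less work per failure but spend more time writing state.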

The "LLM as a service" workflow for Bedrock remains the strongest play that AWS has right now. It's clear that there is significant investment going into expanding functionality, and long-term, it is likely to be the "default" choice for many companies if they continue to prioritize the flexibility to build custom applications powered by multiple models with different performance vs. cost ratios. GCP is prioritizing agents as their strong pitch for Vertex, but there is an argument to be made that the highest spenders long-term will demand the most flexibility in deployment choices.
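The flexibility argument comes down to routing: send each request to the cheapest model that is good enough for it. The minimal sketch below assumes a hypothetical three-tier catalog with made-up quality scores and prices. It does not call Bedrock or any real model API. A production router would use measured eval scores and live pricing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    quality: float            # hypothetical 0-1 score from your own evals
    usd_per_1k_tokens: float  # hypothetical blended price


# Hypothetical catalog: three tiers with different performance vs. cost ratios.
CATALOG = (
    ModelOption("small-fast", 0.60, 0.0003),
    ModelOption("mid-tier", 0.78, 0.003),
    ModelOption("frontier", 0.92, 0.015),
)


def route(min_quality: float, catalog=CATALOG) -> ModelOption:
    """Pick the cheapest model that clears the quality bar; fall back to the best one."""
    eligible = [m for m in catalog if m.quality >= min_quality]
    if not eligible:
        return max(catalog, key=lambda m: m.quality)
    return min(eligible, key=lambda m: m.usd_per_1k_tokens)


def estimate_cost(tasks, catalog=CATALOG) -> float:
    """tasks: iterable of (min_quality, tokens) pairs handled by one application."""
    return sum(route(q, catalog).usd_per_1k_tokens * tokens / 1000 for q, tokens in tasks)


if __name__ == "__main__":
    workload = [(0.5, 10_000), (0.7, 2_000), (0.9, 1_000)]
    routed = estimate_cost(workload)
    frontier_only = sum(CATALOG[-1].usd_per_1k_tokens * t / 1000 for _, t in workload)
    print(f"routed: ${routed:.4f} vs frontier-only: ${frontier_only:.4f}")
```

For this sample workload, routing costs $0.0240, compared with $0.1950 for sending everything to the top tier. That gap is the performance vs. cost trade-off multi-model platforms are selling.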

Brian Olsavsky: Okay. Thanks, Doug. Let me start with AWS. Yeah, the primary drivers of the year-over-year margin increase are threefold. So first is accelerating top-line demand. That helps with all of our efficiencies, our cost-control efforts and of course, what you just mentioned, I believe, the change in the useful life of our servers this year. Let me remind you on that one. We made the change in 2024 to extend the useful life of our servers.

This added about 200 basis points of margin year-over-year. On cost control, it's been a number of areas. It's -- first is hiring and staffing. We're being very measured in our hiring, and you can tell: as a company, our office staff is down slightly year-over-year and it's flat to the end of last year. So we're really working hard to maintain our efficiencies not only in the sales force and other areas and production teams, but also in our infrastructure areas.
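The useful-life change Brian mentions is an accounting estimate, and its mechanism is easy to show. Stretching a straight-line depreciation schedule lowers the annual expense, which lifts operating margin. The sketch uses made-up round numbers: a fleet cost of 60 on revenue of 100, and an assumed five-to-six-year extension. These were chosen so the arithmetic is easy to follow and are not Amazon's figures. The sketch also simplifies by depreciating the whole fleet from scratch. In practice, a change in estimate is applied prospectively to the remaining book value of existing assets.

```python
def straight_line_annual(cost: float, useful_life_years: float, salvage: float = 0.0) -> float:
    """Annual straight-line depreciation expense."""
    return (cost - salvage) / useful_life_years


def margin_uplift_bps(fleet_cost: float, old_life: float, new_life: float, revenue: float) -> float:
    """Operating-margin change, in basis points, from stretching the depreciation schedule."""
    saving = straight_line_annual(fleet_cost, old_life) - straight_line_annual(fleet_cost, new_life)
    return saving * 10_000 / revenue


if __name__ == "__main__":
    # Hypothetical round numbers (not Amazon's): fleet cost 60 on a revenue base of 100.
    print(margin_uplift_bps(fleet_cost=60.0, old_life=5, new_life=6, revenue=100.0))  # prints 200.0
```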

"We are measured in hiring" is the new "your margin is my opportunity". Tech sales opportunities at AWS will remain flat, while quotas continue to go up. If you want to get hired, somebody has to leave.

Andy Jassy: Yeah. So some quick context, Ross. I think one of the least understood parts about AWS, over time, is that it is a massive logistics challenge. If you think about it, we have 35 or so regions around the world, which is an area of the world where we have multiple data centers, and then probably about 130 availability zones, or data centers, and then we have thousands of SKUs we have to land in all those facilities.

And if you land too little of them, you end up with shortages, which end up in outages for customers. So most don't end up with too little, they end up with too much. And if you end up with too much, the economics are woefully inefficient. And I think you can see from our economics that we've done a pretty good job over time at managing those types of logistics and capacity. And it's meant that we've had to develop very sophisticated models in anticipating how much capacity we need, where, in which SKUs and units.

And so I think that the AI space is, for sure, earlier stage, more fluid and dynamic than our non-AI part of AWS. But it's also true that people aren't showing up for 30,000 chips in a day. They're planning in advance. So we have very significant demand signals giving us an idea about how much we need. And I think that one of the differences if you were able to get inside of the economics of the different types of providers here is how well they manage that utilization and that capacity.

I still find it entertaining that Andy has to explain to analysts on every single earnings call that capacity planning at AWS’s scale is literally one of the most impressive technical achievements in the world, yet everybody remains very confused about it. The argument made here is also the fundamental reasoning behind consumption as a sales model - if you want to scale a customer long-term, you need to prove the value but also deliver the outcome in both software and hardware.

Provisioning too much capacity upfront means you'll lose a lot of money; not delivering the hardware when it's needed means you won't win the workload. The middle ground is the customer ending up on an alternative (more expensive) instance, which then requires additional discounts and concessions to make it acceptable.
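This trade-off maps onto the textbook newsvendor model. Suppose running short costs Cu per unit (the lost workload) and over-provisioning costs Co per unit (idle hardware). The cost-minimizing capacity is then the demand quantile at Cu / (Cu + Co). The sketch below assumes normally distributed demand and uses hypothetical numbers. It illustrates the general technique, not how AWS actually plans capacity.

```python
from statistics import NormalDist


def critical_ratio(underage_cost: float, overage_cost: float) -> float:
    """Target probability of having enough capacity: Cu / (Cu + Co)."""
    return underage_cost / (underage_cost + overage_cost)


def optimal_capacity(mean_demand: float, sd_demand: float,
                     underage_cost: float, overage_cost: float) -> float:
    """Newsvendor optimum: the demand quantile at the critical ratio (normal demand assumed)."""
    ratio = critical_ratio(underage_cost, overage_cost)
    return NormalDist(mean_demand, sd_demand).inv_cdf(ratio)


if __name__ == "__main__":
    # Hypothetical forecast: 10,000 accelerators expected, standard deviation 2,000.
    # A lost workload (Cu) is assumed to cost 3x as much as an idle unit (Co).
    print(round(optimal_capacity(10_000, 2_000, underage_cost=3.0, overage_cost=1.0)))  # prints 11349
```

With lost workloads assumed to cost three times as much as idle units, the model provisions roughly 1,350 units above the forecast mean. Steering customers to a pricier alternative instance, the "middle ground" above, effectively lowers Cu, which pulls the optimal buffer back down.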

Andy Jassy: And I think if you look at what's happened in Generative AI over the last couple of years, I think you're kind of missing the boat if you don't believe that's going to happen. It absolutely is going to happen. So we have a really broad footprint where we believe if we rearchitect the brains of Alexa with next-generation foundational models, which we're in the process of doing, we have an opportunity to be the leader in that space. And I think if you look at a lot of the applications today that use Generative AI, there's a large number of them that are having success in cost avoidance and productivity.

And then you're increasingly seeing more applications have success in really impacting the customer experience and being really good at taking large corpuses of data and being able to summarize and aggregate and answer questions, but not that many yet that are really good on top of that in taking actions for customers. And I think that the next generation of these assistants and the Generative AI applications will be better at not just answering questions and summarizing the indexing and aggregating data, but also taking actions. And you can imagine us being pretty good at that with Alexa.

If Sundar pointed to Google Assistant as his opportunity to scale Gemini into a competitor to ChatGPT, Andy went even further. He claimed that his team is rebuilding the brains of Alexa on next-generation foundation models, with the opportunity to make it the leading voice assistant on the market.

Interesting times, considering the recently launched voice mode for ChatGPT. Due to the inference costs involved, Amazon is planning to introduce a paid Alexa plan in the $5-$10 monthly range. That will be interesting to watch, given that ChatGPT is expected to rise in price towards the $50-per-month range over time.

Andy Jassy: Our orientation, our DNA and our core at Amazon starts with the customer, and everything moves backwards from that. Any meeting you go to inside of Amazon, we're always asking ourselves what do customers want? What do customers say? What do they not like about the experience? What could be better? And that customer orientation is very important, not just in how you take care of customers, but the world changes quickly all the time.

And the technology is changing really quickly. And so if you have the combination of strong technical aptitude, propensity and a passion for inventing and then also a customer orientation where it drives everything you do, I think you have an opportunity to continue to build great trust in a business over a long period of time.

The challenge of changing the tech sales culture at AWS in one paragraph:

Customer obsession is great, but to achieve outstanding growth, you also need to offer customers thought leadership and guide them into what "good" looks like, rather than just reactively responding to their needs.

Azure

Satya Nadella: Thank you, Brett. We are off to a solid start to our fiscal year, driven by continued strength of Microsoft Cloud, which surpassed $38.9 billion in revenue, up 22%. AI-driven transformation is changing work, work artifacts and workflow across every role, function, and business process, helping customers drive new growth and operating leverage. All up, our AI business is on track to surpass an annual revenue run rate of $10 billion next quarter, which will make it the fastest business in our history to reach this milestone.

$10 billion in ARR by the end of this calendar year is an impressive milestone for Microsoft. Meanwhile, the AI bears are complaining that "Microsoft's investment in AI is not paying off", seemingly overlooking the significant strides Microsoft is making in this space.

Satya Nadella: Now I'll highlight examples of our progress starting with infrastructure. Azure took share this quarter. We are seeing continued growth in cloud migration. Azure Arc now has over 39,000 customers across every industry, including American Tower, CTT, L'Oréal, up more than 80% year-over-year. We now have data centers in over 60 regions around the world. And this quarter, we announced new cloud and AI infrastructure investments in Brazil, Italy, Mexico, and Sweden as we expand our capacity in line with our long-term demand signals.

At the silicon layer, our new Cobalt 100 VMs are being used by companies like Databricks, Elastic, Siemens, Snowflake, and Synopsys to power their general-purpose workloads at up to 50% better price performance than previous generations. On top of this, we are building out our next-generation AI infrastructure, innovating across the full stack to optimize our fleet for AI workloads. We offer the broadest selection of AI accelerators, including our first-party accelerator, Maia 100 as well as the latest GPUs from AMD and NVIDIA.

Something that a lot of outsiders don't understand about the power of consumption models is the sheer flexibility that they can offer. Not being locked into specific SKUs forever means that customers can jump into new products and get improved ROI without having to go through a lengthy process of signing up for a new or modified contract.
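Here is a simplified sketch of why dollar-denominated commitments are flexible. Whatever eligible SKU the customer runs draws down the commitment. Moving to a newer SKU with better price performance therefore changes only what the same dollars buy. The SKU names, prices and the 2x price-performance gap are hypothetical. Real committed-spend agreements also carry eligibility rules and discount terms that are not modeled here.

```python
from dataclasses import dataclass, field


@dataclass
class SpendCommitment:
    """A dollar-denominated commitment that any eligible SKU can draw down (simplified)."""
    committed_usd: float
    usage: list = field(default_factory=list)  # entries: (sku, work_units, cost_usd)

    @property
    def remaining_usd(self) -> float:
        return self.committed_usd - sum(cost for _, _, cost in self.usage)

    def consume(self, sku: str, work_units: float, usd_per_unit: float) -> None:
        cost = work_units * usd_per_unit
        if cost > self.remaining_usd:
            raise ValueError("usage exceeds remaining commitment")
        self.usage.append((sku, work_units, cost))

    def work_delivered(self) -> float:
        return sum(units for _, units, _ in self.usage)


if __name__ == "__main__":
    deal = SpendCommitment(committed_usd=1_000.0)
    deal.consume("gen-old", work_units=400.0, usd_per_unit=1.0)
    # Mid-term switch to a hypothetical newer SKU with 2x price performance:
    # no contract change, the remaining dollars simply buy more work.
    deal.consume("gen-new", work_units=1_200.0, usd_per_unit=0.5)
    print(deal.work_delivered(), deal.remaining_usd)  # prints 1600.0 0.0
```

The customer gets 1,600 units of work from the same $1,000. Staying on the old SKU would have delivered 1,000 units, and no contract had to be renegotiated along the way.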

Satya Nadella: Our partnership with OpenAI also continues to deliver results. We have an economic interest in a company that has grown significantly in value, and we have built differentiated IP and are driving revenue momentum. More broadly with Azure AI, we are building an end-to-end app platform to help customers build their own Copilots and agents.

Azure OpenAI usage more than doubled over the past six months as both digital natives like Grammarly and Harvey as well as established enterprises like Bajaj Finance, Hitachi, KT, and LG move apps from test to production.

GE Aerospace, for example, used Azure OpenAI to build a new digital assistant for all 52,000 of its employees. In just three months, it has been used to conduct over 500,000 internal queries and process more than 200,000 documents. And this quarter, we added support for OpenAI's newest model family, o1. We're also bringing industry-specific models through Azure AI, including a collection of best-in-class multimodal models for medical imaging.

While the media has focused on "the big rift between OpenAI and Microsoft," the tech sales teams continue to drive consumption across their key accounts and move POCs into production.

Satya Nadella: And with the GitHub models, we now provide access to our full model catalog directly within the GitHub developer workflow. Azure AI is also increasingly an on-ramp to our data and analytics services. As developers build new AI apps on Azure, we have seen an acceleration of Azure Cosmos DB and Azure SQL DB hyperscale usage as customers like Air India, Novo Nordisk, Telefonica, Toyota Motors North America, and Uniper take advantage of capabilities, purpose-built for AI applications. And with Microsoft Fabric, we provide a single AI-powered platform to help customers like Chanel, EY, KPMG, Swiss Air, and Syndigo unify their data across clouds.

The database upsell shows up again, similar to GCP and AWS. At this stage, I think it's clear that the hyperscalers will not wait on the data platform ISVs to capture this market share - they are actively pushing their native products and aiming for upsells.

Satya Nadella: We now have over 16,000 paid Fabric customers, over 70% of the Fortune 500. Now on to developers. GitHub Copilot is changing the way the world builds software. Copilot enterprise customers increased 55% quarter-over-quarter as companies like AMD and Flutter Entertainment tailor Copilot to their own code base. And we are introducing the next phase of AI code generation, making GitHub Copilot agentic across the developer workflow. GitHub Copilot Workspace is a developer environment which leverages agents from start to finish so developers can go from spec to plan to code all in natural language.

Copilot Autofix is an AI agent that helps developers at companies like Asurion and Auto Group fix vulnerabilities in their code over 3x faster than it would take them on their own. We're also continuing to build on GitHub's open platform ethos by making more models available via GitHub Copilot. And we are expanding the reach of GitHub to a new segment of developers, introducing GitHub Spark, which enables anyone to build apps in natural language.

Probably the most important part of the whole call, which of course went unnoticed by the general investor public:

GitHub Copilot was the first flagship enterprise use case and remains a key driver behind a lot of the momentum for AI adoption.

Growth continues to be explosive: Copilot enterprise customers grew 55% quarter-over-quarter as more organizations sign up for paid usage.

Microsoft is pushing for the next big market share winner - text-to-application natively within GitHub.

Satya Nadella: Already, we have brought generative AI to Power Platform to help customers use low-code, no-code tools to cut costs and development time. To date, nearly 600,000 organizations have used AI-powered capabilities in Power Platform, up 4x year-over-year.

Citizen developers at ZF, for example, built apps simply by describing what they need using natural language. And this quarter, we introduced new ways for customers to apply AI to streamline complex workflows with Power Automate.

Low-code/no-code platforms were seen as the future of software adoption just 2 years ago. Today, this whole market is both expanding rapidly and open to disruption by whoever can deliver the best text-to-application functionality. GitHub is clearly aiming for that opportunity, and given its existing footprint, it is very likely to dominate it.

Satya Nadella: And we continue to see enterprise customers coming back to buy more seats. All up, nearly 70% of the Fortune 500 now use Microsoft 365 Copilot, and customers continue to adopt it at a faster rate than any other new Microsoft 365 suite. Copilot is the UI for AI and with Microsoft 365 Copilot, Copilot Studio and Agents and now Autonomous Agents, we have built an end-to-end system for AI business transformation. With Copilot Studio, organizations can build and connect Microsoft 365 Copilot to Autonomous Agents, which then delegate to Copilot when there is an exception.

More than 100,000 organizations from Ensure, Standard Bank, and Thomson Reuters, to Virgin Money and Zurich Insurance have used Copilot Studio to date, up over 2x quarter-over-quarter. More broadly, we are seeing AI drive a fundamental change in the business applications market as customers shift from legacy apps to AI-first business processes. Dynamics 365 continues to take share as organizations like Evron, Heineken, and Lexmark chose our apps over other providers. And monthly active users of Copilot across our CRM and ERP portfolio increased over 60% quarter-over-quarter.

Source: Salesforce marketing materials

It turns out that angry X posts by Marc Benioff and cutesy marketing advertisements are not as powerful as, well, world-class enterprise sales execution. Copilot and Microsoft's agents are not going anywhere.

Satya Nadella: And we continue to take what we learn and turn it into innovations across our products. Security Copilot, for example, is being used by companies in every industry, including Clifford Chance, Intesa Sanpaolo, and Shell to perform SecOps tasks faster and more accurately. And we are now helping customers protect their AI deployments too. Customers have used Defender to discover and secure more than 750,000 gen AI app instances and used Purview to audit over one billion Copilot interactions to meet their compliance obligations.

The cybersecurity upsells remain strong, despite the poor perception of Microsoft among cybersecurity professionals.

Kash Rangan: Satya, when you talked about the investment cycle, these models are getting bigger and more expensive, but you also pointed out how the inference phase is where you're likely to get paid. What does that cycle look like in inference for Microsoft? Where are the products and applications that will show up on the Microsoft P&L as a result of the inference era of AI kicking in?

Satya Nadella: Thanks, Kash. I mean, the good news for us is that we're not waiting for that inference to show up, right? If you sort of think about the point, we even made that this is going to be the fastest growth to $10 billion of any business in our history, it's all inference, right? One of the things that may not be as evident is that we're not actually selling raw GPUs for other people to train. In fact, that's sort of a business we turn away because we have so much demand on inference that we are not taking what I would -- in fact, there's a huge adverse selection problem today where people -- it's just a bunch of tech companies still using VC money to buy a bunch of GPUs.

We kind of really are not even participating in most of that because we are literally going to the real demand, which is in the enterprise space or our own products like GitHub Copilot or M365 Copilot. So, I feel the quality of our revenue is also pretty superior in that context. And that's what gives us even the conviction, to even Amy's answers previously, about our capital spend is if this was just all about sort of a bunch of people training large models and that was all we got, then that would be ultimately still waiting, to your point, for someone to actually have demand which is real. And in our case, the good news here is we have a diversified portfolio.

The biggest jab out of the 3 calls, by a mile. The key to understanding how far ahead Microsoft is right now lies in how its AI revenue is generated. Microsoft is using all of its capital to fund hardware that serves inference for enterprise use cases. The majority of AWS and GCP AI revenue comes from renting out hardware to companies that are training their own models but may or may not have true product-market fit. The rest is internal usage. The fact that AWS still doesn't disclose its actual AI-related revenue, let alone a breakdown by type of usage, says it all.

Raimo Lenschow: If you talk about the market at the moment because you were first with Copilot, you had identified a lot with Copilots and now we're talking agents. Can you, kind of -- Satya, how do you think about that? And to me, it looks like an evolution that we're discovering how to kind of productize AI better, et cetera. So how do you think about that journey between Copilots, agents and maybe what's coming next?

Satya Nadella: Sure. The system we have built is Copilot, Copilot Studio, agents and autonomous agents. You should think of that as the spectrum of things, right? So ultimately, the way we think about how this all comes together is you need humans to be able to interface with AI. So, the UI layer for AI is Copilot. You can then use Copilot Studio to extend Copilot. For example, you want to connect it to your CRM system, to your office system, to your HR system.

You do that through Copilot Studio by building agents effectively. You also build autonomous agents. So, you can use even that's the announcement we made a couple of weeks ago is you can even use Copilot Studio to build autonomous agents. Now these autonomous agents are working independently, but from time to time, they need to raise an exception, right? So autonomous agents are not fully autonomous because at some point, they need to either notify someone or have someone input something.

And when they need to do that, they need a UI layer and that's where again, it's Copilot. So, Copilot, Copilot agents built in Copilot Studio, autonomous agents built in Copilot Studio, that's the full system we think that comes together, and we feel very, very good about the position. And then, of course, we are taking the underlying system services across that entire stack that I just talked about, making it available in Azure, right? So, you have the raw infrastructure if you want it.

You have the model layer independent of it. You have the AI app server in Azure AI, right? So, everything is also a building block service in Azure for you to be able to build. In fact, if you want to build everything that we have built in the Copilot stack, you can build it yourself using the AI platform. So that's sort of, in simple terms, our strategy, and that's kind of how it all comes together.

The answer above lays out why the cloud hyperscalers (the bottom of the stack) are benefiting the most from this transformational phase in computing.

While ISVs such as Salesforce or Databricks can offer their own compelling implementations of agents or LLM-as-a-service, at a fundamental level the best place to design, implement and manage AI workflows will be natively within the hyperscaler ecosystem. Microsoft is doing its usual thing of very confusing branding, but at a high level, what's outlined above covers the 3 core application-layer implementations:

AI applications (Copilot as a competitor to ChatGPT/Claude)

Regular applications powered by AI (Copilot features within Microsoft 365)

Custom applications for specific use cases (agents created and deployed via Copilot Studio)

So basically, underneath the Copilot umbrella, you have all 3 types of products, all focused on enterprise adoption, all being pitched relentlessly by Microsoft's reps. The rest, as they say, is a $10 billion ARR.