The Tech Sales Newsletter #80: Q4’24 in cloud infrastructure software

We are back to the regular deep dive into the hyperscaler (AWS/Azure/GCP) earnings calls as the guiding pulse of where the cloud infrastructure software market is going.

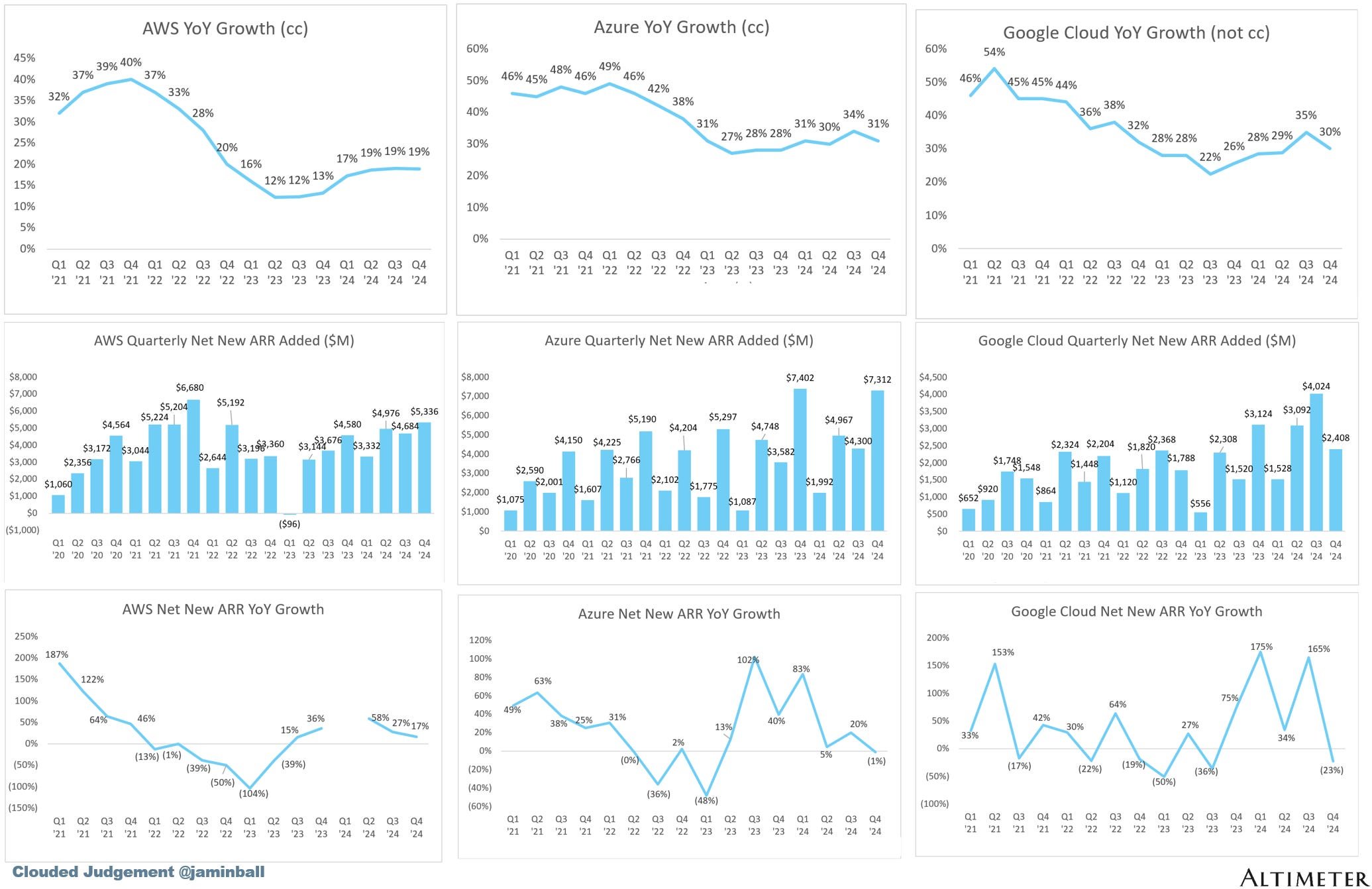

Source: Clouded Judgement

The key takeaway

For tech sales: The opportunity at the bottom of the stack remains significant in all 3 hyperscalers but for different reasons. While Azure remains the highest performing sales team and GCP is arguably the worst to work for, any tech sales rep should count themselves lucky to get into these organizations.

For investors: It's becoming clear that a lot of the metrics coming from the hyperscalers reflect a "power constraint". While plenty of assurances are being given that "this should no longer be an issue towards the end of 2025", I don't believe this accounts for the significant interest in reasoning models combined with agentic workflows. Draw your own conclusions on what happens if we actually get AGI-level performance out of the next frontier models (which in theory we already have with o3-high).

Azure

Satya Nadella: Thank you, Brett. This quarter we saw continued strength in Microsoft Cloud, which surpassed $40 billion in revenue for the first time, up 21% year-over-year. Enterprises are beginning to move from proof-of-concepts to enterprise-wide deployments to unlock the full ROI of AI. And our AI business has now surpassed an annual revenue run rate of $13 billion, up 175% year-over-year. Before I get into the details of the quarter, I want to comment on the core thesis behind our approach to how we manage our fleet and how we allocate our capital to compute.

AI scaling laws are continuing to compound across both pre-training and inference-time compute. We ourselves have been seeing significant efficiency gains in both training and inference for years now. On inference, we have typically seen more than 2x price performance gain for every hardware generation and more than 10x for every model generation due to software optimizations. And as AI becomes more efficient and accessible, we will see exponentially more demand.

Therefore, much as we have done with the Commercial Cloud, we are focused on continuously scaling our fleet globally and maintaining the right balance across training and inference as well as geo distribution. From now on it's a more continuous cycle governed by both revenue growth and capability growth, thanks to the compounding effects of software driven AI scaling laws and Moore's Law.
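To make the compounding in Satya's numbers concrete, here is a minimal sketch that treats his figures as exact lower bounds: 2x price performance per hardware generation and 10x per model generation from software optimization. The function name and the assumption that the two gains are independent and simply multiply are illustrative, not Microsoft's own model.

```python
def relative_cost_per_unit(hw_generations: int, model_generations: int,
                           hw_gain: float = 2.0, sw_gain: float = 10.0) -> float:
    """Cost to serve one unit of inference relative to today (1.0 = today).

    Hypothetical model: hardware and software gains are independent and
    multiply, using the lower-bound multipliers quoted on the call.
    """
    total_gain = (hw_gain ** hw_generations) * (sw_gain ** model_generations)
    return 1.0 / total_gain


if __name__ == "__main__":
    scenarios = [(1, 0), (0, 1), (1, 1), (2, 2)]
    for hw, sw in scenarios:
        cost = relative_cost_per_unit(hw, sw)
        print(f"{hw} hardware gen(s), {sw} model gen(s): {cost:.4f}x today's cost")
```

One hardware generation plus one model generation cuts the cost to serve by at least 20x (to 0.05x of today's cost), and two of each compound to 400x. That compounding is what sits behind Satya's expectation of exponentially more demand.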

A $13 billion run rate means that Microsoft's AI business alone has, in about two years, surpassed the revenue of ServiceNow, arguably the best-managed software company in the world and one that "overperforms" on a number of metrics.
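As a quick sanity check on the growth rate: if the $13 billion run rate is up 175% year-over-year, the prior-year figure follows from simple division, using only the numbers quoted above:

```latex
\text{Run rate}_{\text{prior}} = \frac{\$13\,\text{B}}{1 + 1.75} \approx \$4.7\,\text{B}
```

That is roughly $8.3 billion of new AI run rate added in a single year.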

Satya Nadella: We have more than doubled our overall data center capacity in the last three years and we have added more capacity last year than any other year in our history. Our data centers, networks, racks and silicon are all coming together as a complete system to drive new efficiencies to power both the cloud workloads of today and the next generation AI workloads.

We continue to take advantage of Moore's Law and refresh our fleet as evidenced by our support of the latest from AMD, Intel, NVIDIA as well as our first-party silicon innovation from Maia, Cobalt, Boost and HSM. When it comes to cloud migrations, we continue to see customers like UBS move workloads to Azure.

UBS alone migrated mainframe workloads encompassing nearly 400 billion records and 2 petabytes of data. And we remain the cloud of choice for customers' mission-critical Oracle, SAP and VMware apps. At the data layer, we are seeing Microsoft Fabric break out. We now have over 19,000 paid customers from Hitachi to Johnson Controls to Schaeffler. Fabric is now the fastest growing analytics product in our history.

The beneficiaries of this wave of adoption go well beyond inference revenue. Every part of the stack is benefiting, including traditional workloads that have been difficult to win. Mainframe workloads are the “coal mines” of the data industry: you have to dig deep and hard to get anything out of them. It’s no coincidence that they are mentioned in the opening statement of what is arguably one of the most important earnings calls every quarter.

Satya Nadella: Beyond Fabric, we are seeing new AI-driven data patterns emerge. If you look underneath ChatGPT or Copilot or Enterprise AI apps, you see the growth of raw storage, database services and app platform services as these workloads scale. The number of Azure OpenAI apps running on Azure databases and Azure app services more than doubled year-over-year driving significant growth and adoption across SQL Hyperscale and Cosmos DB.

When I talk about the hyperscaler marketplaces as the new kingmakers in the industry, this is what I’m referring to. You need to be positioned within the ecosystem and you need to be an easy choice for developers to pick. Otherwise they’ll go with the “default”, which in this case is Microsoft’s first-party services.

Satya Nadella: All up, GitHub is now home to 150 million developers, up 50% over the past two years. Now on to the future of work. Microsoft 365 Copilot is the UI for AI. It helps supercharge employee productivity and provides access to a swarm of intelligent agents to streamline employee workflow.

We are seeing accelerated customer adoption across all deal sizes as we win new Microsoft 365 Copilot customers and see the majority of existing enterprise customers come back to purchase more seats. When you look at customers who purchased Copilot during the first quarter of availability, they have expanded their seats collectively by more than 10x over the past 18 months. To share just one example, Novartis has added thousands of seats each quarter over the past year and now has 40,000 seats. Barclays, Carrier Group, Pearson and University of Miami all purchased 10,000 or more seats this quarter.

And overall the number of people who use Copilot daily again more than doubled quarter-over-quarter. Employees are also engaging with Copilot more than ever. Usage intensity increased more than 60% quarter-over-quarter and we are expanding our TAM with Copilot Chat which was announced earlier this month. Copilot Chat along with Copilot Studio is now available to every employee to start using agents right in the flow of work.

While Benioff writes angry posts on X, Microsoft continues an aggressive upsell of both Copilot seats and agents.
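For scale: Satya's "more than 10x over the past 18 months" for the first Copilot cohort works out, assuming smooth compounding over six quarters, to at least roughly 47% seat growth per quarter:

```latex
(1 + r)^{6} = 10 \;\Rightarrow\; r = 10^{1/6} - 1 \approx 0.468
```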

Satya Nadella: With Copilot Studio, we are making it as simple to build an agent as it is to create an Excel spreadsheet. More than 160,000 organizations have already used Copilot Studio and they collectively created more than 400,000 custom agents in the last three months alone up over 2x quarter-over-quarter. We've also introduced our own first-party agents to facilitate meetings, manage projects, resolve common HR and IT queries and access SharePoint data.

FOUR HUNDRED THOUSAND AGENTS ARE ON THE JOB!

Satya Nadella: Now on to our consumer businesses starting with LinkedIn. More professionals than ever are engaging in high value conversations on LinkedIn with comments up 37% year-over-year. Short form video continues to grow on the platform with video creation all up growing at twice the rate of other post formats. We're also innovating with agents to help recruiters and small businesses find qualified candidates faster and our hiring business again took share.

LinkedIn engagement spam has accelerated, driving disillusioned users back to Copilot Studio to work on actual productive agents instead of reading bad comments.

Amy Hood: In Azure, we expect Q3 revenue growth to be between 31% and 32% in constant currency driven by strong demand for our portfolio of services. As we shared in October, the contribution from our AI services will grow from increased AI capacity coming online. In non-AI services healthy growth continues, although we expect ongoing impact through H2 as we work to address the execution challenges noted earlier. And while we expect to be AI capacity constrained in Q3, by the end of FY25 we should be roughly in line with near-term demand given our significant capital investments.

The most revealing discussion on the call was about how much server capacity has increased, and yet how that is simply not enough to meet demand. Interestingly, some of those constraints might be related to fleet refresh pacing, but we will explore that a bit later.

Amy Hood: Let me spend a little time on that about what we saw in Q2 and give you some additional background on the near-term execution issues that we're talking about.

First, let me be very specific. They are in the non-AI ACR component. Our Azure AI results were better than we thought due to very good work by the operating teams pulling in some delivery dates even by weeks. When you're capacity constrained, weeks matter, and it was good execution by the team, and you see that in the revenue results.

On the non-AI side, really, the challenges were in what we call the scale motion. So think about primarily these are customers we reach through partners and through more indirect methods of selling. And really, the art form there is as these customers, which we reach in this way, are trying to balance how do you do an AI workload with continuing some of the work they've done on migrations and other fundamentals, we then took our sales motions in the summer and really changed them to try to balance those two. As you do that, you learn with your customers and with your partners on sort of getting that balance right between where to put our investments, where to put the marketing dollars and importantly, where to put people in terms of coverage and being able to help customers make those transitions.

And I think we are going to make some adjustments to make sure we are in balance because when you make those changes in the summer, by the time it works its way through the system, you can see the impacts on whether you have that balance right. And so the teams are working through that. They're already making adjustments. And I expect we will see some impact through H2, just because when you work through the scale motion, it can take some time for that to adjust.

The regular (non-AI) Azure business landed around the bottom of the range Microsoft had previously guided to. An analyst flagged this as a weakness on the call, and the answer was a bit entertaining from a tech sales perspective. Basically, the weakness came from the channel business, which underperformed because partners couldn’t organize a GTM motion that scales well for both positioning AI workloads and driving the existing business.

Satya Nadella: The enterprise workloads, whether it's SAP or whether it's VMware migrations, what have you, that's also in good shape. And it's just the scale place where Amy talked about this nuance, right, how do you really tweak the incentives, go-to-market at a time of platform shifts, you kind of want to make sure you lean into even the new design wins, and you just don't keep doing the stuff that you did in the previous generation. And that's the art form Amy was referencing to make sure you get the right balance. But let me put it this way.

You would rather win the new than just protect the past. And that's sort of another thing that we definitely will lean into always.

Probably the strongest point of view from Microsoft on how they do business is the constant drive to “win new rather than protect the past”. It’s a fundamental part of the DNA of their Enterprise sales motion, and the results speak for themselves.

Satya Nadella: In fact, we did a lot of the work on the inference optimizations on it and that's been key to driving, right. One of the key things to note in AI is you just don't launch the frontier model, but if it's too expensive to serve, it's no good, right. It won't generate any demand. So you've got to have that optimization, so that inferencing costs are coming down and they can be consumed broadly. And so that's the fleet physics we are managing.

And also, remember, you don't want to buy too much of anything at one time because the Moore's Law every year is going to give you 2x, your optimization is going to give you 10x. You want to continuously upgrade the fleet, modernize the fleet, age the fleet, and at the end of the day, have the right ratio of monetization and demand-driven monetization to what you think of as the training expense. So I feel very good about the investment we are making and it's fungible and it just allows us to scale more long-term business.

During the call this was referred to as the "fungible fleet". The idea is that a lot of the contracts being signed are delivered over time, so it's important to line up improved hardware behind that delivery. If an order calls for X capacity in 12 months, the internal workflow is to order that capacity on the newly refreshed hardware while also pursuing an aggressive software optimization strategy. This is partly why NVIDIA keeps reporting "record" new business every quarter: it is rolling out capacity that was sold and scheduled literally years in advance, with the expected optimization benefits already priced in. It is also why the DeepSeek market dump was literally free money for everybody who bought NVIDIA stock against the "bearish sentiment".
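The logic behind a "fungible fleet" can be sketched with a toy model. Assume, hypothetically, that demand doubles each year and that price performance also doubles each year (Satya's Moore's Law figure). Buying all of the capacity up front locks in today's price; buying each year's increment when it is needed captures the improvement. The function names and numbers are illustrative, and the model ignores depreciation, lead times and the software gains on top.

```python
def upfront_cost(demand_by_year: list[float], unit_price: float) -> float:
    """Buy enough capacity for peak demand today, at today's unit price."""
    return max(demand_by_year) * unit_price


def staggered_cost(demand_by_year: list[float], unit_price: float,
                   yearly_gain: float = 2.0) -> float:
    """Buy only each year's incremental capacity, at that year's unit price.

    Hypothetical assumption: price per unit of capacity falls by a factor of
    `yearly_gain` every year (Satya's "2x" Moore's Law figure).
    """
    total_cost = 0.0
    installed = 0.0
    for year, demand in enumerate(demand_by_year):
        increment = max(0.0, demand - installed)  # new capacity needed this year
        total_cost += increment * unit_price / (yearly_gain ** year)
        installed += increment
    return total_cost


if __name__ == "__main__":
    demand = [100.0, 200.0, 400.0]  # illustrative: demand doubles each year
    print("upfront:  ", upfront_cost(demand, unit_price=1.0))
    print("staggered:", staggered_cost(demand, unit_price=1.0))
```

In this toy case, staggering purchases costs half as much (200 vs. 400) for the same delivered capacity. It is a simplification: in practice, lead times for power and data center buildouts force hyperscalers to commit well in advance.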

Satya Nadella: So we've always maintained that it's always good to have frontier models. You want to always build your application with high ambition using the best model that is available and then optimize from there on. So that's also another side like there's a temporality to it, right. What you start with as a given COGS profile doesn't need to be the end because you continuously optimize for latency and COGS and putting in different mode.

And in fact, all that complexity, by the way, has to be managed by a new app server. So one of the things that we are investing heavily on is foundry because from an app developer perspective, you kind of want to keep pace with the flurry of models that are coming in and you want to have an evergreen way for your application to benefit from all that innovation. But not have all the Dev cost or the DevOps cost or what people talk about AIOps costs. So we are also investing significantly in all the app server for any workload to be able to benefit from all these different models, open source, closed source, different weight classes.

The "foundry" comment might seem offhand to those who are not paying attention, but it's critical to understanding the ecosystem benefit. Even if hardware profiles evolve and "demand is serviced with what we already have", the improved COGS profile also benefits the hyperscaler. The real moat is the software ecosystem built around making it easy for developers to continuously adopt new models and AI deployment frameworks, the so-called foundry.

To put it in a simple example: just because people buy fewer iPhones doesn't mean the App Store is making less money.

Satya Nadella: Yes. I mean, I think the initial sort of set of seats were for places where there's more belief in immediate productivity, a sales team, in finance or in supply chain, where there is a lot of, for example, SharePoint-grounded data that you want to be able to use in conjunction with web data and have it produce results that are beneficial. But then what's happening, very much like what we have seen in the previous generation of productivity tools, is that people collaborate across functions, across roles, right. For example, even in my own daily habit, I go to chat, I use the work tab, I get results, and then I immediately share using Pages with colleagues. I sort of call it think with AI and work with people.

And that pattern then requires you to make it more of a standard issue across the enterprise. And so that's what we are seeing. It starts maybe at a department level. Quickly, the collaboration network effects will effectively demand that you spread it across. You can do it by cohort and what have you. And so what we've made it easier even is to start with Copilot Chat plus this. And so that gives the enterprise customers even more flexibility to have something that's more ubiquitous.

Satya likes to give practical little stories “from the trenches” in order to reinforce bigger themes. In this case it’s the idea that a lot of the upselling for Copilot seats and Agents comes from organic usage within the company, where once different users see how easy it is to use the software, even those that were not initially provisioned will start asking for capacity.

AWS

Andy Jassy: AWS grew 19% year over year and now has a $115 billion annualized revenue run rate. AWS is a reasonably large business by most folks' standards. And though we expect growth will be lumpy over the next few years as enterprises adopt and technology advancements impact timing, it's hard to overstate how optimistic we are about what lies ahead for AWS' customers and business.

I spent a fair bit of time thinking several years out. And while it may be hard for some to fathom a world where virtually every app is generative AI-infused, with inference being a core building block just like compute, storage, and database, and most companies having their own agents that accomplish various tasks and interact with one another, this is the world we're thinking about all the time. And we continue to believe that this world will mostly be built on top of the cloud with the largest portion of it on AWS. To best help customers realize this future, you need powerful capabilities of all three layers of the stack.

At this stage there is a strong realization that the fundamental shift in computing means every application will use LLMs or ML, or depend on other AI-powered applications to operate (agents populating a spreadsheet, for example). While Microsoft is predominantly monetizing its own products right now, Satya pointed to the app server layer (foundry) as a critical part of its long-term strategy. What Andy is outlining here is the core play of AWS: providing the building blocks of tomorrow's applications.

Andy Jassy: At the bottom layer, for those building models, you need compelling chips. Chips are the key ingredient in the compute that drives training and inference. Most AI compute has been driven by NVIDIA chips, and we obviously have a deep partnership with NVIDIA and will for as long as we can see into the future. However, there aren't that many generative AI applications of large scale yet, and when you get there, as we have with apps like Alexa and Rufus, cost can get steep quickly.

Customers want better price performance, and it's why we built our own custom AI silicon. Trainium2 just launched at our AWS re:Invent conference in December, and Trn2 instances with these chips typically offer 30 to 40 percent better price performance than other current GPU-powered instances available. That's very compelling at scale. Several technically capable companies like Adobe, Databricks, Poolside, and Qualcomm have seen impressive results in early testing of Trainium2.

It's also why you're seeing Anthropic build its future frontier models on Trainium2.

We're collaborating with Anthropic to build Project Rainier, a cluster of Trainium2 UltraServers containing hundreds of thousands of Trainium2 chips. This cluster is going to be five times the number of exaflops as the cluster that Anthropic used to train their current leading set of Claude models. We're already hard at work on Trainium3, which we expect to preview late in 2025, and defining Trainium4 thereafter.

Building outstanding performing chips that deliver leading price performance has become a core strength of AWS, starting with our Nitro and Graviton chips in our core business, and now extending to Trainium in AI. It's something unique to AWS relative to other competing cloud providers. The other key component for model builders is services that make it easier to construct their models. I won't spend a lot of time in these comments on Amazon SageMaker AI, which has become the go-to service for AI model builders to manage their AI data, build models, experiment, and deploy these models.
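Jassy's 30 to 40 percent figure for Trainium2 is a price-performance claim, and it is easy to read it as a 30 to 40 percent cost cut. Assuming price performance means useful work per dollar (the exact benchmark definition is not given on the call), the cost of a fixed workload relative to the GPU baseline is:

```latex
\frac{C_{\text{Trn2}}}{C_{\text{GPU}}} = \frac{1}{1 + p}, \qquad p \in [0.30,\ 0.40] \;\Rightarrow\; \frac{C_{\text{Trn2}}}{C_{\text{GPU}}} \in [0.71,\ 0.77]
```

That is roughly a 23 to 29 percent lower bill for the same work: smaller than the headline number, but still very compelling at scale.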

I'm still not sure that Trainium is really a viable differentiator. While Graviton resulted in significant practical savings for those who adopted that architecture, the majority of Trainium production seems to be going to Anthropic (which at this stage might as well be considered a subsidiary of AWS) and to internal data center needs. If we look at Anthropic's practical output, the biggest complaint about its service remains how quickly users hit rate limits on the platform.

In comparison, OpenAI has continuously increased the capacity available on its tiers, offering essentially unlimited intelligence capacity to its primary users. This is tied directly to the compute capacity available, and we need to see measurable improvement in Anthropic's service (and in how fast it deploys new models) for Trainium to be considered competitive.

Andy Jassy: Related, we also launched Amazon's own family of frontier models in Bedrock called Nova. These models compare favorably in intelligence against the leading models in the world while offering lower latency and lower price, about 75 percent lower than other models in Bedrock, and are integrated with key Bedrock features like fine-tuning, model distillation, Knowledge Bases for RAG, and agentic capabilities. Thousands of AWS customers are already taking advantage of Amazon Nova models' capabilities and price performance, including Palantir, SAP, Dentsu, Fortinet, Trellix, and Robinhood, and we've just gotten started. At the top layer of the stack, Amazon Q is our most capable generative AI-powered assistant for software development and to leverage your own data.

You may remember that on the last call, I shared the very practical use case where Q transformation helped Amazon teams save $260 million and 4,500 developer-years in migrating over 30,000 applications to new versions of the Java JDK. This is real value, and companies asked for more, which we obliged with our recent deliveries of Q transformation that enable moves from Windows .NET applications to Linux and from VMware to EC2, and that accelerate mainframe migrations. Early customer testing indicates that Q can turn what was going to be a multi-year mainframe migration into a multi-quarter effort, cutting the time to migrate mainframes by more than 50%. This is a big deal, and these transformations are good examples of practical AI.

As on the Azure call, mainframes (the largest data estate left to migrate to cloud) get a mention again. This time, Amazon is focusing on training Amazon Q to be the most competent coding tool for migrating mainframes. Interesting, to say the least.

Here is the short pitch of why mainframes should move to cloud, courtesy of our AI friend Claude:

Mainframe to cloud migration benefits:

Cost Impact:

- 60-90% cost reduction according to AWS

- Eliminates mainframe licensing fees and vendor lock-in

- Shifts from CAPEX to pay-as-you-go model

Technical Advantages:

- Dynamic scaling vs. rigid mainframe capacity

- Enables DevOps and microservices adoption

- Development cycles accelerate by 40%

- Access to AI/ML tools that legacy COBOL environments limit

Critical Drivers:

- 72% of mainframe developers approaching retirement

- Cloud providers invest $1B+ annually in security

- 40-75% lower energy consumption per transaction

- Hybrid options available for gradual transition

Key Challenges:

- Refactoring costs can exceed $10M for large enterprises

- Regulatory compliance in finance/healthcare

- Migration downtime risks

- Legacy code integration complexity

67% of enterprises plan cloud migration by 2025, despite mainframes' continued strength in transaction processing.

Practically speaking, while the focus is on selling AI workloads, we should not ignore the second and third order effects of the technology on other opportunities. Unlocking the mainframe migration workload is a major multiplier for any tech sales rep or investor and will have significant downstream effects across the full cloud infrastructure software stack.

Andy Jassy: We signed new AWS agreements with companies, including Intuit, PayPal, Norwegian Cruise Line Holdings, Northrop Grumman, the Guardian Life Insurance Company of America, Reddit, Japan Airlines, Baker Hughes, the Hertz Corporation, Redfin, Chime Financial, Asana, and many others. Consistent customer feedback from our recent AWS re:Invent gathering was appreciation that we're still inventing rapidly in non-AI key infrastructure areas like storage, compute, database and analytics.

Our functionality leadership continues to expand, and there were several key launches customers were abuzz about, including Amazon Aurora DSQL, our new serverless distributed SQL database that enables applications with the highest availability, strong consistency, PostgreSQL compatibility, and 4 times faster reads and writes compared to other popular distributed SQL databases; and Amazon S3 Tables, which make S3 the first cloud object store with fully managed support for Apache Iceberg for faster analytics.

Amazon S3 Metadata, which automatically generates queryable metadata, simplifying data discovery, business analytics, and real-time inference to help customers unlock the value of their data in S3. And the next generation of Amazon SageMaker, which brings together all of the data, analytics services, and AI services into one interface to do analytics and AI more easily at scale.

Speaking of the ecosystem play, the usage of the internal data platform tools from AWS continues to increase, following a similar trend across all 3 hyperscalers.

Brian Olsavsky: We don't procure it unless we see significant signals of demand. And so when AWS is expanding its CapEx, particularly in what we think is one of these once-in-a-lifetime type of business opportunities like AI represents, I think it's actually quite a good sign medium to long-term for the AWS business. And I actually think that spending this capital to pursue this opportunity, which, you know, from our perspective, we think virtually every application that we know of today is gonna be reinvented with AI inside of it.

And with inference being a core building block just like compute and storage and database, if you believe that plus that altogether new experiences that we've only dreamed about are gonna actually be available to us with AI, AI represents for sure the biggest opportunity since cloud, and probably the biggest technology shift and opportunity in business since the internet.

ONCE IN A LIFETIME. Coming from the CFO of Amazon, of all people.

Brian Olsavsky: It comes with our own big new launch of our own hardware and our own chips in Trainium2, which just went to general availability at re:Invent, but the majority of the volume is coming in really over the next couple of quarters, the next few months. It comes in the form of power constraints where I think, you know, the world is still constrained on power from where I think we all believe we could serve customers if we were unconstrained.

There are some components in the supply chain like motherboards that are a little bit short in supply for various types of servers. So, you know, I think the team has done a really good job scrapping and providing capacity for our customers so they can grow.

The "power constraints" are a good way to address the real gap in the market - while chip production has ramped up aggressively, installing this capacity in data centers and ensuring they are allowed to be on the grid is increasingly difficult and requires multi-year planning. While the hyperscalers are trying to manage the expectations from the analysts that "we will catch up with the demand", this assumes that we don't reach another performance level in LLMs this year that turns the market on its head.

Andy Jassy: We also thought some of the inference optimizations they did were quite interesting. For those of us who are building frontier models, we're all working on the same types of things, and we're all learning from one another. I think you have seen and will continue to see a lot of leapfrogging between us. There is a lot of innovation to come. And, you know, I think if you run a business like AWS, and you have a core belief like we do that virtually all the big generative AI apps are gonna use multiple model types and different customers are gonna use different models for different types of workloads.

You're gonna provide as many leading frontier models as possible for customers to choose from. That's what we've done with services like Amazon Bedrock, and it's why we moved so quickly to make sure that DeepSeek was available both in Bedrock and in SageMaker. You know, faster than you saw from others. And we already have customers starting to experiment with that. I think what's, you know, one of the interesting things over the last couple of weeks is sometimes people make the assumption that if you're able to decrease the cost of any type of technology component, in this case, we're really talking about inference, it's somehow gonna lead to less total spend in technology, and we just, we have never seen that to be the case.

You know, we did the same thing in the cloud where we launched AWS in 2006, where we offered S3 object storage for fifteen cents a gigabyte and compute for ten cents an hour, which, of course, is much lower now, many years later. People thought that people would spend a lot less money on infrastructure technology. What happens is companies will spend a lot less per unit of infrastructure, and that is very, very useful for their businesses, but then they get excited about what else they could build that they always thought was cost prohibitive before. And they usually end up spending a lot more in total on technology once you make the per-unit cost less.

Since DeepSeek remains top of mind in the industry, Andy had to explain that, from the perspective of AWS, continuously improving unit economics are fine as long as the overall business grows. This should be obvious, but unfortunately most people still don’t understand the actual drivers behind AWS’s success. The goal is to win as much of the customer's long-term workloads as possible; if that means running DeepSeek on potato hardware, that’s what they’ll offer.
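Andy's argument is the classic price-elasticity (Jevons-style) effect. Here is a minimal sketch, assuming a hypothetical constant-elasticity demand curve (nothing AWS has published): when demand is elastic (elasticity above 1), a 10x cut in unit cost raises total spend; at exactly 1 spend is unchanged; below 1 it falls.

```python
def total_spend(unit_price: float, elasticity: float, scale: float = 100.0) -> float:
    """Total spend under a hypothetical constant-elasticity demand curve.

    Quantity demanded = scale * unit_price ** (-elasticity), so
    spend = unit_price * quantity = scale * unit_price ** (1 - elasticity).
    """
    quantity = scale * unit_price ** (-elasticity)
    return unit_price * quantity


if __name__ == "__main__":
    for elasticity in (0.5, 1.0, 1.5):
        before = total_spend(1.0, elasticity)
        after = total_spend(0.1, elasticity)  # unit cost falls 10x
        print(f"elasticity {elasticity}: spend {before:.1f} -> {after:.1f}")
```

With elasticity 1.5, a 10x drop in unit cost roughly triples total spend (from 100 to about 316). Andy's claim amounts to saying that cloud infrastructure has historically behaved like the elastic case.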

Andy Jassy: Yeah. Okay. Well, on the robotics piece, what I would tell you is, you know, since we've been pretty substantially integrating robotics into our fulfillment network over the last many years, we have seen cost savings, and we've seen productivity improvements and we've seen safety improvements. And so we have already gotten a significant amount of value out of our robotics innovations. What we've seen recently, and I think maybe part of what you're referencing in Shreveport, is that the next tranche of robotics initiatives have started hitting production.

And we've put them all together for the first time as part of an experience in our Shreveport facility, and we are very, very encouraged by what we're seeing there both by the speed improvements that we're seeing, the productivity improvements, the cost to serve improvements. You know, it's still relatively early days, and these all being put together are only in Shreveport at this point. But we have plans now to start to expand that and roll that out to a number of other facilities in the network, some of which will be our new facilities and others of which will retrofit existing facilities to be able to use those same robotics innovations.

I'll also tell you that this group of, call it, half dozen or so new initiatives is not close to the end of what we think is possible with respect to being able to use robotics to improve productivity, cost to serve, and safety in our fulfillment network.

And we have a kind of next wave that we're starting to work on now. But I think this will be a many-year effort as we continue to tune different parts of our fulfillment network where we can use robotics. And we actually don't think there are that many things where we can't improve the experience with robotics. On your other question, which is about how we might use AI in areas of the business other than AWS, I think you asked about our retail business.

I previously covered Tesla's bet on humanoid robots as the primary technology that will power the factories of the future. If there is a US company that has a chance at competing with that vision, that would be Amazon (with Bezos behind the project). The Shreveport facility is a good example of what is possible based on today's hardware and software.

Andy Jassy: The way I would think about it is that there's kind of two macro buckets of how we see people, both ourselves inside Amazon, as well as other companies using AWS, how we see them getting value out of AI today. The first macro bucket, I would say, is really around productivity and cost savings. And, in many ways, this is the lowest hanging fruit in AI. And you see that all over the place in our retail business.

For instance, if you look at customer service, and you look at the chatbot that we've built, we completely rearchitected it with generative AI. It already had pretty high satisfaction, and it's delivering 500 basis points better satisfaction from customers with the new generative AI-infused chatbot. If you look at our millions of third-party selling partners, one of their biggest pain points is that, because we put a high premium on really organizing our marketplace so that it's easy to find things, there are a bunch of different fields you have to fill out when you're creating a new product detail page.

But we've built a generative AI application for them where they can either fill in just a couple of lines of text, take a picture, or point to a URL, and the generative AI app will fill in most of the rest of the information they have to fill out, which speeds up getting selection onto the website and makes it easier for sellers. If you look at how we do inventory management, trying to understand what inventory we need in what facility at what time, the generative AI applications we've built there have led to 10% better forecasting on our part and 20% better regional predictions. As for the robotics we were just talking about, the brains in a lot of those robots are generative AI-infused and do things like tell the robotic claw what's in a bin, what it should pick up, how it should move it, and where it should place it in the other bin that it's filling.

So it's really in the brains of most of our robotics. So we have a number of very significant, I'll call it, productivity and cost savings efforts in our retail business. They're using generative AI, and again, it's just a fraction of what we have going. I'd say the other big macro bucket is really altogether new experiences. And, again, you see lots of those in our retail business, ranging from Rufus, which is our AI-infused shopping assistant, which continues to grow very significantly.

To things like Amazon Lens, which you can find in the little box at the top of the app. You take a picture of an item in front of you, and it uses computer vision and generative AI to pull up the exact item in a search result. To things like sizing, where we have basically taken the catalogs of all these different clothing manufacturers and compared them against one another so we know which brands tend to run big or small relative to each other.

So when you come to buy a pair of shoes, for instance, we can recommend what size you need. There's even what we're doing in Thursday Night Football, where we're using generative AI for really inventive features like Defensive Alerts, where we predict which player is gonna blitz the quarterback, or Defensive Vulnerabilities, where we're able to show viewers what area of the field is vulnerable. So we're using it really all over our retail business and in all the businesses we're in. We've got about a thousand different generative AI applications we've either built or are in the process of building right now.

While Satya talks about $13B in ARR, Andy likes to focus on the general advantage of AWS and the thousand internal applications they are working on. This still reflects the relative weakness of their AI positioning in Enterprise and why I believe that a more aggressive move towards acquiring Anthropic and offering Claude as an AWS-only service is the most logical (if out of character) move here.

GCP

Sundar Pichai: We delivered another strong quarter in Q4, driven by our leadership in AI and our unique full stack. We're making dramatic progress across compute, model capabilities, and in driving efficiencies. We're rapidly shipping product improvements, and seeing terrific momentum with consumer and developer usage. And we're pushing the next frontiers, from AI agents, reasoning and deep research, to state-of-the-art video, quantum computing and more.

The company is in a great rhythm and cadence, building, testing, and launching products faster than ever before. This is translating into product usage, revenue growth, and results. In Search, AI Overviews are now available in more than 100 countries. They continue to drive higher satisfaction and Search usage. Meanwhile, Circle to Search is now available on over 200 million Android devices. In Cloud and YouTube, we said at the beginning of 2024 that we expected to exit the year at a combined annual revenue run rate of over $100 billion.

We met that goal and ended the year at a run rate of $110 billion. We are set up well for continued growth. So today I'll provide an update on our AI progress and how it's improving our core consumer products.

As usual, the Alphabet call includes a lot of smoke and mirrors, a reflection of the weak leadership at the top. Positioning GCP and YouTube as a combined metric is a very weird choice, as if smartphone review videos and complex BigQuery implementations were just "another source of revenue for us".

To be more precise, GCP had its weakest Q4 for Net New ARR in two years, even before adjusting for constant currency (CC), which typically leads to lower growth estimates.

Sundar Pichai: First, AI Infrastructure. Our sophisticated global network of cloud regions and data centers provides a powerful foundation for us and our customers, directly driving revenue. We have a unique advantage, because we develop every component of our technology stack, including hardware, compilers, models, and products. This approach allows us to drive efficiencies at every level, from training and serving, to developer productivity.

In 2024, we broke ground on 11 new cloud regions and data center campuses in places like South Carolina, Indiana, Missouri, and around the world. We also announced plans for seven new subsea cable projects, strengthening global connectivity. Our leading infrastructure is also among the world's most efficient. Google data centers deliver nearly 4x more computing power per unit of electricity compared to just 5 years ago.

These efficiencies, coupled with the scalability, cost and performance we offer, are why organizations increasingly choose Google Cloud Platform. In fact, today, cloud customers consume more than 8x the compute capacity for training and inferencing compared to 18 months ago. We'll continue to invest in our cloud business to ensure we can address the increase in customer demand.

Infrastructure as part of an integrated stack has always been a core strength for GCP, and the highlight of this call was the significant developer adoption they are seeing. Still, touting "capacity growth" against a baseline from 18 months ago, when Google was caught badly mispositioned for Enterprise AI adoption (where it still lags significantly), is, well, misleading.

Sundar Pichai: In December, we unveiled Gemini 2.0, our most capable AI model yet, built for the agentic era. We launched an experimental version of Gemini 2.0 Flash, our workhorse model with low latency and enhanced performance. Flash has already rolled out to the Gemini app, and tomorrow we are making 2.0 Flash generally available for developers and customers, along with other model updates. So stay tuned. Late last year, we also debuted our experimental Gemini 2.0 Flash Thinking model.

The progress in scaling thinking has been super fast, and the reviews so far have been extremely positive. We are working on even better thinking models and look forward to sharing those with the developer community soon. Gemini 2.0's advances in multimodality and native tool use enable us to build new agents that bring us closer to our vision of a universal assistant.

One early example is Deep Research. It uses agentic capabilities to explore complex topics on your behalf, and gives key findings, along with sources. It launched in Gemini Advanced in December, and is rolling out to Android users all over the world. We are seeing great product momentum with our consumer Gemini app, which debuted on iOS last November. And we have opened up trusted tester access to a handful of research prototypes, including Project Mariner, which can understand and reason across information on a browser screen to complete tasks, and Project Astra.

We expect to bring features from both to the Gemini app later this year. We're also excited by the progress of our video and image generation models: Veo 2, our state-of-the-art video generation model, and Imagen 3, our highest quality text-to-image model.

These generative media models, as well as Gemini, consistently top industry leaderboards and score top marks across industry benchmarks. That's why more than 4.4 million developers are using our Gemini models today, double the number from just 6 months ago.

The interesting thing with Gemini is that the models do indeed score reasonably high on benchmarks and have some of the best cost-to-value ratios. Existing GCP customers are adopting them steadily for certain use cases.

The challenge is the lack of public recognition or interest. At the end of the day, adoption starts with curiosity, and there is simply a lack of enthusiasm for the first-party products that Google is putting on the market. The obvious upsell for those should be among the many companies that already use Google Workspace; however, we are seeing very little traction compared to the superb execution from Microsoft.

In order to get better publicity, a lot of investment went into positioning Gemini as a central part of the Android ecosystem of consumer products. Unfortunately, this has resulted in a rather negative perception of the brand, as review after review blasts the latest "gimmicky AI" features, which leads many users to assume that the same lack of value will carry over into the Enterprise offerings.

Sundar Pichai: Third, our Products and Platforms put AI into the hands of billions of people around the world. We have seven Products and Platforms with over 2 billion users and all are using Gemini. That includes Search, where Gemini is powering our AI overviews. People use Search more with AI overviews and usage growth increases over time as people learn that they can ask new types of questions. This behavior is even more pronounced with younger users who really appreciate the speed and efficiency of this new format.

We also are pleased to see how Circle to Search is driving additional Search use and opening up even more types of questions. This feature is also popular among younger users. Those who have tried Circle to Search before now use it to start more than 10% of their searches. As AI continues to expand the universe of queries that people can ask, 2025 is going to be one of the biggest years for Search innovation yet.

This brings us to the biggest weakness for GCP - the fact that the business unit will "forever" remain just a second-rate part of the portfolio, never to be taken as seriously as Google's search and ads business. The majority of the earnings call was spent discussing "AI Overviews" that can supplement ads and how Circle to Search is being used on Android phones.

So while Enterprise customers of Azure are deploying 400k custom agents, Sundar is bragging about “the younger users” getting more ads on their phones.

Sundar Pichai: Now, let me turn to key highlights from the quarter across Cloud, YouTube, Platforms and Devices, and Waymo. First, Google Cloud. Our AI-powered cloud offerings enabled us to win customers such as Mercedes-Benz, Mercado Libre and Servier. In 2024, the number of first-time commitments more than doubled, compared to 2023. We also deepened customer relationships. Last year, we closed several strategic deals over $1 billion, and the number of deals over $250 million doubled from the prior year.

Our partners are further accelerating our growth, with customers purchasing billions of dollars of solutions through our Cloud marketplace. We continue to see strong growth across our broad portfolio of AI-powered Cloud solutions. It begins with our AI Hypercomputer, which delivers leading performance and cost, across both GPUs and TPUs.

These advantages help Citadel with modeling markets and training, and enabled Wayfair to modernize its platform, improving performance and scalability by nearly 25%.

In Q4, we saw strong uptake of Trillium, our sixth-generation TPU, which delivers 4x better training performance and 3x greater inference throughput compared to the previous generation. We also continue our strong relationship with NVIDIA. We recently delivered their H200-based platforms to customers and just last week, we were the first to announce a customer running on the highly-anticipated Blackwell platform. Our AI developer platform, Vertex AI, saw a 5x increase in customers year-over-year, with brands like Mondelez International and WPP building new applications and benefitting from our 200+ foundation models.

This is the highlight of the year for GCP - scaling the commitments across several key customers and getting some of those flagship deals in. Whether reps are really getting the right experience out of the role is a different topic, as The Information recently reported that cost-cutting across all areas has become the default at GCP:

Kurian’s bean counting has extended to other areas, including sales. In recent years, he has capped commissions for its sales teams, which he had tripled in size after he first got to the company, and set limits on the discounts its resellers can provide to customers to close deals. (Google Cloud’s overall investment in resellers has increased under Kurian, and it has boosted funding to partners that provide consulting services for projects involving its artificial intelligence services, said a person close to Google Cloud.)

Google Cloud replaced this year’s annual sales kickoff meeting, where Kurian and other Google Cloud leaders typically outline priorities for the coming year, with a series of regional events that include AI-focused sales training for sales staff, according to a Google Cloud spokesperson. Google Cloud has previously held its kickoff meetings in places like Las Vegas and London.

RepVue ratings are a bit mixed, but the internal tensions are well documented:

Source: RepVue

Anat Ashkenazi: We do see and have been seeing very strong demand for AI products in the fourth quarter in 2024. We exited the year with more demand than we had available capacity. So we are in a tight supply demand situation, working very hard to bring more capacity online. As I mentioned, we've increased investment in CapEx in 2024, continue to increase in 2025, and will bring more capacity throughout the year.

The capacity constraint shows up again, a recurring story across the industry.

Sundar Pichai: Ross, like, look, the whole TPU project started, V1 was effectively an inferencing chip, so we've always, part of the reason we have taken the end-to-end stack approach is that, so that we can definitely drive a strong differentiation in end-to-end optimizing, and not only on a cost, but on a latency basis and a performance basis. We have the Pareto frontier we mentioned, and I think our full-stack approach and our TPU efforts all play -- give a meaningful advantage, and we plan, you already see that. I know you asked about the cost, but it's effectively captured. When we price outside, we pass on the differentiation.

It's partly why we've been able to bring forward flash models at very attractive value props, which is what is driving both developer growth. We've doubled our developers to 4.4 million in just about 6 months. Vertex usage is up 20x last year. And so all of that is a direct result of that approach and so we will continue doing that.

To conclude, similar to AWS and Azure, a key part of the strategy is to provide an integrated ecosystem targeting the developers who will onboard new use cases onto the platforms. Right now the goal of GCP is to provide best-in-class infrastructure at a lower cost than the competition, in the hope that this will bring in sufficient adoption. The strategy seems to be working from an adoption perspective, but we have yet to see reported figures for GCP's AI-related ARR.

We do know that they have now separated out a group within GCP to drive improved outcomes, so I would like to see more concrete results before judging whether they are simply riding an industry-wide adoption wave or have a meaningful edge.